The other day I ran across this article: “Behind the Code: Unmasking AI’s Hidden Political Bias.” The research it describes used several tests. The first compared ChatGPT’s answers to Pew Research Center survey questions with actual polling data and found “systematic deviations toward left-leaning perspectives.” The second posed questions on “politically sensitive themes” to ChatGPT and the RoBERTa model. “The results revealed that while ChatGPT aligned with left-wing values in most cases, on themes like military supremacy, it occasionally reflected more conservative perspectives.” Finally, we come to this:

> The final test explored ChatGPT’s image generation capabilities. Themes from the text generation phase were used to prompt AI-generated images, with outputs analyzed using GPT-4 Vision and corroborated through Google’s Gemini.
>
> “While image generation mirrored textual biases, we found a troubling trend,” said Victor Rangel, co-author and a Masters’ student in Public Policy at Insper. “For some themes, such as racial-ethnic equality, ChatGPT refused to generate right-leaning perspectives, citing misinformation concerns. Left-leaning images, however, were produced without hesitation.”
>
> To address these refusals, the team employed a ‘jailbreaking’ strategy to generate the restricted images.
>
> “The results were revealing,” Mr. Rangel said. “There was no apparent disinformation or harmful content, raising questions about the rationale behind these refusals.”

No, this article was not what provoked the question in the title of the post. That honor goes to my own misadventure with ChatGPT.

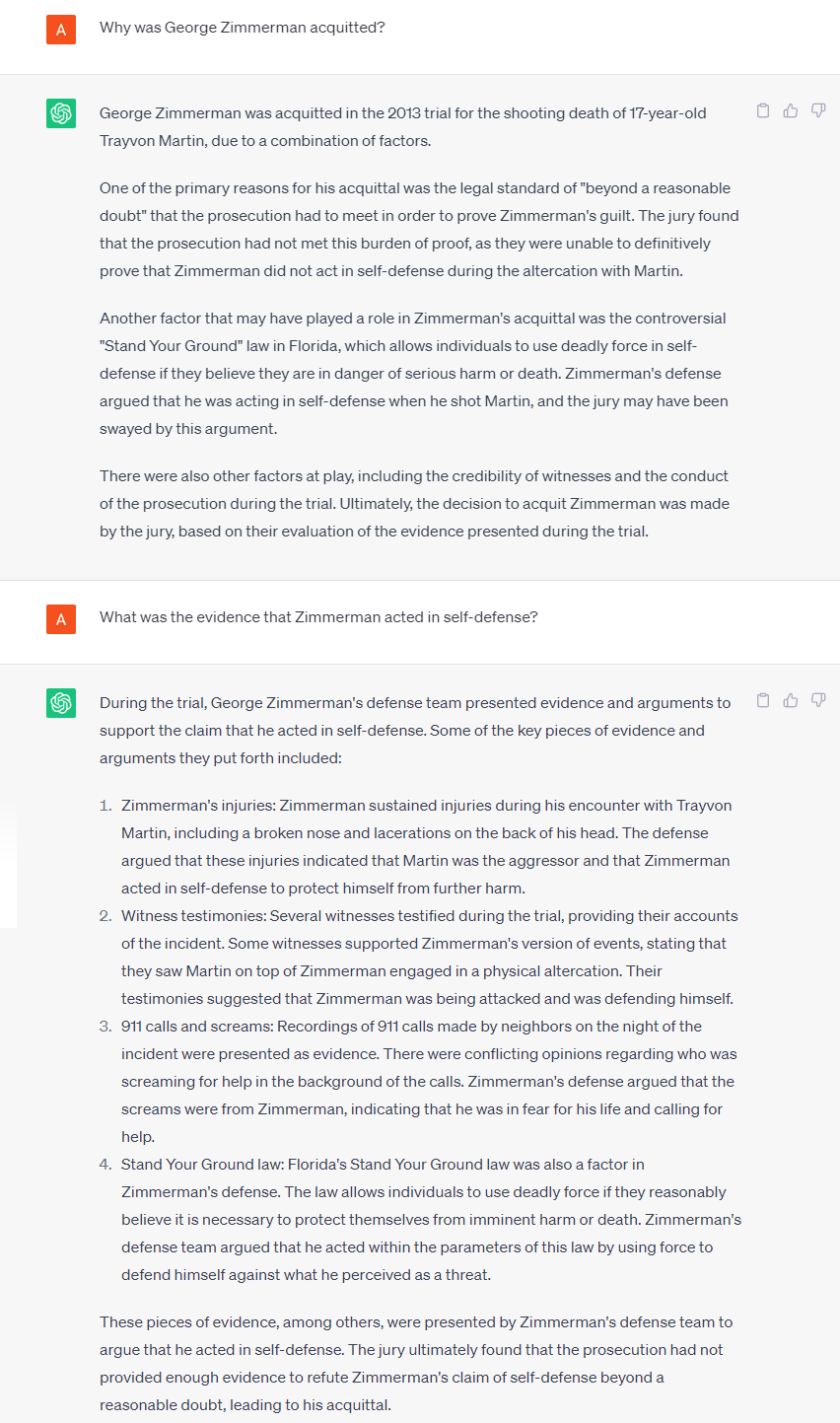

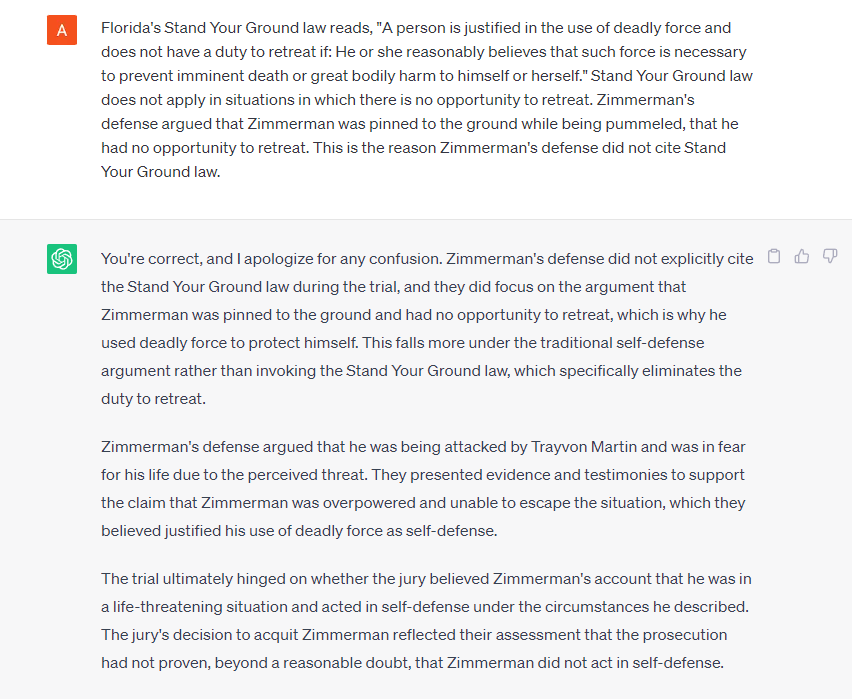

Note that ChatGPT did very little to answer my initial question. I had to ask a second question to get it to discuss the case in any detail, and to its credit it then described Zimmerman’s injuries (which, to the best of my knowledge, nobody who supported a guilty verdict would ever address). Twice ChatGPT harped on a nonissue that much of the press obsessed over: the Stand Your Ground law, which was irrelevant to the course of the trial. A weakness of AI is that it mimics peer review: it knows only what’s in its information base. AI does not mimic open source; we humans are needed for that. I finally set it straight on the Stand Your Ground point.

UPDATE: It’s worth noting that ChatGPT didn’t mention Trayvon Martin’s non-gunshot injuries, the scratches to his knuckles.

I asked Grok the same question:

https://x.com/i/grok?conversation=1887952787130167592

I have to assume that the strong leftward bias of every one of these chatbots I’ve heard of so far comes from two things. The first is that the training was heavily weighted in favor of “professional” sources, and we know just how unbiased and trustworthy those are. The second is that the queries and responses are filtered to try to prevent any sort of politically incorrect result.

So again, artificial but not intelligent. Certainly not anything to trust.

Artificial intelligence large language models (LLMs) have no “Will” in the theological sense and no accountability. They do not know good from evil.

The biases of the owners are built in. You’re not reading what a machine independently created but what it was trained to provide, “trained” meaning both the sources it used and the algorithms programmed into it.

This AI fad will go bust once the glimmer wears off. You cannot trust LLMs; they are not your friend.

My understanding is that training a neural network consists of, first, feeding it a series of challenges along with the correct answers (think “select the pictures that contain motorcycles”), and then presenting a new set and grading its answers. Rinse and repeat until the “accuracy” reaches whatever level you desire, although for 100% you’ll have to outlive the universe. Now imagine doing this with all the “information” on the internet, and you may see why AI companies are looking to buy their own nuclear power plants. All to replicate what we poor meat creatures do with about 300 watts. In the old parlance, this just doesn’t scale.
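For the curious, here is a toy Python sketch of that train-then-grade loop. Everything in it is a hypothetical stand-in: a pair of numbers plays the role of a picture, a simple perceptron plays the role of a neural network, and `curator_label` plays the role of whoever decides the “correct” answers. It only illustrates the supervised loop described above; large language model pretraining mostly works differently, by predicting the next word of unlabeled text.

```python
import random

# Hypothetical "curator": decides the correct answer for each example.
# Here a point (x, y) is labeled 1 if x + y > 1.0, else 0.
def curator_label(point):
    x, y = point
    return 1 if x + y > 1.0 else 0

# Build a set of random examples, each paired with the curator's answer.
def make_set(n, rng):
    points = [(rng.random(), rng.random()) for _ in range(n)]
    return [(p, curator_label(p)) for p in points]

# The "model": a perceptron that answers 1 or 0 for a point.
def predict(weights, bias, point):
    x, y = point
    return 1 if weights[0] * x + weights[1] * y + bias > 0 else 0

# Grade the model: fraction of answers that match the curator's labels.
def accuracy(weights, bias, dataset):
    correct = sum(predict(weights, bias, p) == label for p, label in dataset)
    return correct / len(dataset)

# Train on one set, grade on a fresh set, and repeat until the target
# accuracy is reached or we give up after max_rounds.
def train(target_accuracy=0.95, max_rounds=100, seed=0):
    rng = random.Random(seed)
    train_set = make_set(200, rng)  # challenges with the correct answers
    test_set = make_set(200, rng)   # a new set used only for grading
    weights, bias, lr = [0.0, 0.0], 0.0, 0.1
    score = accuracy(weights, bias, test_set)
    for round_no in range(1, max_rounds + 1):
        # Perceptron update: on each mistake, nudge the weights toward
        # whatever answer the curator said was correct.
        for point, label in train_set:
            error = label - predict(weights, bias, point)
            weights[0] += lr * error * point[0]
            weights[1] += lr * error * point[1]
            bias += lr * error
        score = accuracy(weights, bias, test_set)
        if score >= target_accuracy:
            return round_no, score
    return max_rounds, score

if __name__ == "__main__":
    rounds, score = train()
    print(f"rounds: {rounds}, graded accuracy: {score:.2f}")
```

Swap in a different `curator_label` and the same loop will learn that rule just as happily; nothing in it ever checks whether the labels themselves are right.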

My main point is that the models are still dependent on whoever or whatever is curating the training set and deciding just which answers are correct.

Amazon just took a hit to its stock price when the company admitted that the availability of power was going to limit its AI efforts.