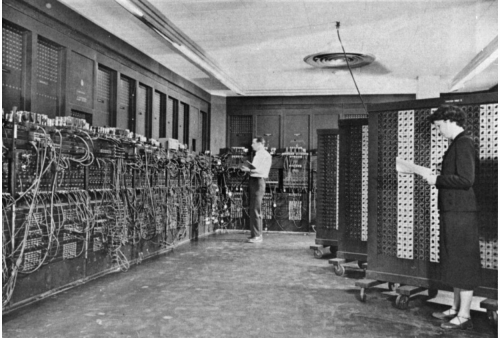

In February 1946, the first general-purpose electronic computer…the ENIAC…was introduced to the public. Nothing like ENIAC had been seen before, and the unveiling of the computer–a room-filling machine with lots of flashing lights and switches–made quite an impact.

ENIAC (the Electronic Numerical Integrator and Computer) was created primarily to help with the trajectory-calculation problems for artillery shells and bombs, a problem that was requiring increasing numbers of people for manual computations. John Mauchly, a physics professor attending a summer session at the University of Pennsylvania, and J Presper Eckert, a 24-year-old grad student, proposed the machine after observing the work of the women (including Mauchly’s wife Mary) who had been hired to assist the Army with these calculations. The proposal made its way to the Army’s liaison with Penn, and that officer, Lieutenant Herman Goldstine, took up the project’s cause. (Goldstine apparently heard about the proposal not via formal university channels but via a mutual friend, which is an interesting point in our present era of remote work.) Electronics had not previously been used for digital computing, and a lot of authorities thought an electromechanical machine would be a better and safer bet.

Despite the naysayers (including RCA, which actually refused to bid on the machine), ENIAC did work, and the payoff was in speed. This was on display in the introductory demonstration, which was well-orchestrated from a PR standpoint. Attendees could watch the numbers changing as the flight of a simulated shell proceeded from firing to impact, which took about 20 seconds…a little faster than the flight of a real, physical shell. Inevitably, the ENIAC was dubbed a ‘giant brain’ in some of the media coverage…well, the “giant” part was certainly true, given the machine’s size and its 30-ton weight.

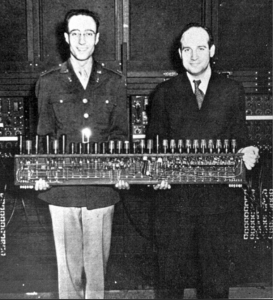

In the photo below, Goldstine and Eckert are holding the hardware module required for one single digit of one number.

The machine’s flexibility allowed it to be used for many applications beyond the trajectory work, beginning with modeling the proposed design of the detonator for the hydrogen bomb. Considerable simplification of the equations had to be done to fit within ENIAC’s capacity; nevertheless, Edward Teller believed the results showed that his proposed design would work. In an early example of a disagreement about the validity of model results, the Los Alamos mathematician Stan Ulam thought otherwise. (It turned out that Ulam was right…a modified triggering approach had to be developed before working hydrogen bombs could be built.) There were many other ENIAC applications, including the first experiments in computerized weather forecasting, which I’ll touch on later in this post.

Programming ENIAC was quite different from modern programming. There was no such thing as a programming language or instruction set. Instead, pluggable cable connections, combined with switch settings, controlled the interaction among ENIAC’s 20 ‘accumulators’ (each of which could store a 10-digit number and perform addition & subtraction on that number) and its multiply and divide/square-root units. With clever programming it was possible to make several of the units operate in parallel. The machine could perform conditional branching and looping…all-electronic, as opposed to earlier electromechanical machines in which a literal “loop” was established by glueing together the ends of a punched paper tape. ENIAC also had several ‘function tables’, in which arrays of rotary switches were set to transform one quantity into another quantity in a specified way…in the trajectory application, the relationship between a shell’s velocity and its air drag.
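As a rough illustration of the two building blocks just described, here is a minimal Python sketch. It is not a model of ENIAC’s actual circuitry: the class name `Accumulator`, the wrap-around behavior on overflow, and the sample values in `DRAG_TABLE` are all assumptions made up for illustration.

```python
MODULUS = 10 ** 10  # a 10-digit decimal register holds 0 .. 9,999,999,999


class Accumulator:
    """Toy stand-in for one accumulator: stores a 10-digit number, adds and subtracts."""

    def __init__(self):
        self.value = 0

    def add(self, n):
        # Assumption: overflow simply wraps around, as in ten's-complement arithmetic.
        self.value = (self.value + n) % MODULUS

    def subtract(self, n):
        self.value = (self.value - n) % MODULUS


# Hypothetical function table: velocity (m/s) -> drag deceleration (m/s^2).
# The numbers are invented; on ENIAC such values were set on rotary switches.
DRAG_TABLE = {0: 0, 100: 2, 200: 8, 300: 18, 400: 32}


def lookup_drag(velocity):
    """Return the entry for the nearest tabulated velocity at or below the input."""
    key = max(k for k in DRAG_TABLE if k <= velocity)
    return DRAG_TABLE[key]
```

In this sketch, a ‘program’ would amount to a fixed sequence of `add`, `subtract`, and `lookup_drag` calls; on the real machine that sequencing lived in the cables and switches rather than in written instructions.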

The original ‘programmers’…although the word was not then in use…were 6 women selected from among the group of human trajectory calculators. Jean Jennings Bartik mentioned in her autobiography that when she was interviewed for the job, the interviewer (Goldstine) asked her what she thought of electricity. She said she’d taken physics and knew Ohm’s Law; Goldstine said he didn’t care about that; what he wanted to know was whether she was scared of it! There were serious voltages behind the panels and running through the pluggable cables.

“The ENIAC was a son of a bitch to program,” Jean Bartik later remarked. Although the equations that needed to be solved were defined by physicists and mathematicians, the programmers had to figure out how to transform those equations into machine sequences of operations, switch settings, and cable connections. In addition to the logical work, the programmers had also to physically do the cabling and switch-setting and to debug the inevitable problems…for the latter task, ENIAC conveniently had a hand-held remote control, which the programmer could use to operate the machine as she walked among its units.

Notoriously, none of the programmers were introduced at the demonstration event or invited to the celebration dinner afterwards. This was certainly due in large part to their being female, but part of it was probably also that programming was not then recognized as an actual professional field on a level with mathematics or electrical engineering; indeed, the activity didn’t even yet have a name. (It is rather remarkable, though, that in an ENIAC retrospective in 1986…by which time the complexity and importance of programming were well understood…The New York Times referred only to “a crew of workers” setting dials and switches.)

The original programming method for ENIAC put some constraints on the complexity of problems that could be handled and also tied up the machine for hours or days while the cable-plugging and switch-setting for a new problem was done. The idea of stored programming had emerged (I’ll discuss later the question of who the originator was)…the idea was that a machine could be commanded by instructions stored in a memory just like data; no cable-swapping necessary. It was realized that ENIAC could be transformed into a stored-program machine with the function tables…those arrays of rotary switches…used to store the instructions for a specific problem. The cabling had to be done only once, to set the machine up for interpreting a particular vocabulary of instructions. This change gave ENIAC a lot more program capacity and made it far easier to program; it did sacrifice some of the speed.

In 1950, the first experiments in computerized weather forecasting were carried out. While trajectory calculations require that time be divided into small intervals, weather forecasting also requires the subdivision of space, and hence is more computationally-intensive. The eminent scientist John von Neumann was deeply involved in this work, and his wife, Klara, worked both on the transformation of ENIAC into a stored-program machine and on the specific weather application. (It’s interesting that Klara’s mathematics teacher back in Hungary had given her a passing grade only because he was impressed that she openly admitted she didn’t understand anything of what was being taught! Yet she was by all accounts an important contributor to the ENIAC project.) The 20-number memory capacity of ENIAC required that vast quantities of punched cards be employed for the capturing and re-entry of intermediate results; mainly because of all this reshuffling, the ENIAC forecasts could barely keep up with the actual weather.

Also run on the stored-program ENIAC was a nuclear critical-mass (fission) calculation using the ‘Monte Carlo method’, in which the behavior of individual neutrons was randomized but the overall result was predictive and replicable. (This work had previously been done by hand, with simulated neutrons moved across a large sheet of paper for successive tiny intervals of time.) Indeed, it was the need for this calculation that spurred the conversion of ENIAC to a stored-program machine…an example of ‘user-driven innovation’, as historian Dr Thomas Haigh has pointed out.
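To give a feel for how randomized individual histories can add up to a repeatable overall answer, here is a toy Python sketch. It is emphatically not the Los Alamos calculation: the one-dimensional slab, the function name `escape_fraction`, and all of its parameters are assumptions chosen only to show the general shape of a Monte Carlo run.

```python
import random


def escape_fraction(n_neutrons, slab_half_width, absorb_prob, seed):
    """Estimate the fraction of neutrons that leave a 1-D slab before being absorbed."""
    rng = random.Random(seed)  # a fixed seed makes the whole run repeatable
    escaped = 0
    for _ in range(n_neutrons):
        position = 0.0
        while True:
            # Each flight has a random direction and a random (exponential) length.
            position += rng.choice((-1.0, 1.0)) * rng.expovariate(1.0)
            if abs(position) > slab_half_width:
                escaped += 1
                break
            if rng.random() < absorb_prob:
                break  # absorbed at the collision site
    return escaped / n_neutrons
```

Calling `escape_fraction(2000, 2.0, 0.3, seed=1)` twice returns the same number both times, because the seed fixes the sequence of random draws. Each simulated neutron’s path is unpredictable, but the aggregate estimate is stable and can be reproduced.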

In 1946, the inventors Eckert and Mauchly left U-Penn as the result of a patent-rights dispute and soon formed their own computer company, which later became UNIVAC (corporate descendant UNISYS). A patent was issued certifying the pair as the inventors of the electronic digital computer. Honeywell, then a computer manufacturer, got tired of the substantial royalties it was paying and brought a lawsuit in 1967 to invalidate the patent. In 1973, Judge Earl Larson ruled in Honeywell’s favor, cancelling the patent in part because of earlier work done by Dr John Atanasoff at Iowa State College. (Atanasoff had indeed built a truly innovative electronic computer; it was, however, a special-purpose device, dedicated entirely to solving simultaneous linear equations, and could not have been reprogrammed for other applications as was ENIAC.)

I don’t think there’s any evidence that the designers of ENIAC were aware of or influenced either by Alan Turing’s theoretical work on computing or by the British COLOSSUS, an electronic (and highly secret) machine which was specialized for codebreaking, as important as these activities were in their own rights.

The idea of stored programming is generally credited to John von Neumann, but it is possible that Eckert and Mauchly had previously proposed the idea…this is certainly what Eckert maintained in his article in A History of Computing in the Twentieth Century and also what Mauchly said in a 1979 letter reproduced here. Some of Adele Goldstine’s ideas in her writeup on ENIAC may also have played a part in the emergence of the idea. The paper that defined the stored program concept in abstract terms, and that quickly became a classic, was indeed largely von Neumann’s work. Dr Haigh notes that this document defines a ‘minimalistic’ approach to machine architecture, citing St-Exupery’s dictum about perfection: “Perfection is achieved, not when there is nothing more to add, but when there is nothing left to take away.” Interestingly, von Neumann defined the concepts of the archetypal computer in terms which owed more to biology than to electronics; ‘neurons’, for example. This may have been done in part to avoid classification restrictions on the document’s circulation.

Picture below is of Klara and John von Neumann, and Adele and Herman Goldstine.

Concerning the specific conversion of ENIAC to a stored-program machine, this effort was spurred by Nick Metropolis of Los Alamos based on the need to automate the Monte Carlo fission calculations and a wind-tunnel problem brought by Richard Clippinger of the Aberdeen Proving Ground, with Adele Goldstine, Jean Bartik, and Klara von Neumann among those doing the work.

There has been much interest in recent years in ‘the women of ENIAC’…Adele Goldstine and Klara von Neumann are rarely mentioned among these accounts, probably because they were not members of the original programming team. The programmers had a difficult job and ENIAC was a complicated machine to learn, but some accounts have over-emphasized this point, suggesting that no one had ever thought about how it could be programmed prior to its being constructed, which is incorrect…indeed, it would have been highly irresponsible to embark on the project without such thinking. And Jean Bartik notes in her memoir how helpful Mauchly and other designers were to the original programmers, even if their interactions weren’t formalized as a training class. In any event, it is good that this team has finally been recognized.

There were a *lot* of relationships and marriages among the project participants (Adele Goldstine was Herman Goldstine’s wife, for example)…today, many organizations have policies that would have deterred or prohibited these relationships.

It is rather remarkable that ENIAC got implemented at all, given its radical nature and the resource-short wartime economy. Goldstine gives much credit to Colonel Paul Gillon…“an extremely competent, energetic officer, who understood a great deal about technical matters…He knew a lot, and he was a person who, if he put his faith in you, he put it in unreservedly.”

Goldstine also credited Army culture: “The one thing that I admired during the war was how very effectively the Old Boys’ Club of West Pointers operated. It was a beautiful thing to watch. These men trusted each other, or at least they knew what von Neumann used to call the ‘mendacity factor’ of each one of their colleagues. They knew how much to trust each person, and if they believed in a man, as I’m convinced they believed in Gillon, there were no problems about getting money and approvals. The normal military tempo was slow, as all government bureaucracies are. The reason this thing went very fast was because Gillon was immediately convinced by us.”

This was originally going to be a much shorter post written last year, but as I got more into the ENIAC history and the anniversary date approached, it got longer. I do wonder what the original developers and programmers of ENIAC would think if they could see some of today’s applications of computer technology, such as the talking-cat video filter.

Some good sources for anyone wanting to learn more about this project and its influences:

–Historian Thomas Haigh has done a great deal of research and writing about ENIAC history; his book, ENIAC in Action (co-authored with Mark Priestley and Crispin Rope) is an indispensable source. The book has a website, which includes many useful links such as Los Alamos Bets on ENIAC, a description of the nuclear Monte Carlo simulations.

Haigh and Priestley also make the interesting point that when people today talk about ‘the women of ENIAC’, they don’t tend to consider the 34 wiremen, technicians, and assemblers who actually built the machine, most of whom were in fact women. The historians emphasize that there were a lot of people critical to this project–and to any project–in addition to the engineers and programmers who tend to get most of the glory.

–Jean Bartik wrote a very readable memoir, titled Pioneer Programmer, about her journey from farm girl to ballistics calculator to ENIAC programmer and then on to other jobs in the emerging computer industry. Not at all shy about expressing her strong opinions about individuals: for example, she didn’t like Herman Goldstine at all (though she liked Adele) but was devoted to Mauchly, whom she chose to give her away at her wedding. She also has some negative things to say about the compiler pioneer Grace Hopper, later Rear Admiral Grace Hopper. Many amusing anecdotes, such as the ‘Dress for Success’ seminar that misfired when she was working in a later job, and some parts not amusing at all, such as the influence of McCarthyism on some of the individuals and on the industry, are included in the memoir.

—Kathleen McNulty Mauchly Antonelli was one of the original ENIAC programmers; she became John Mauchly’s second wife after his first wife, Mary, died in a swimming accident. Her linked memoir is from 2004.

—A History of Computing in the Twentieth Century, by Metropolis, Howlett, and Rota, is a 1980 collection of essays on early computers and software development efforts.

—A short video on ENIAC. The numbers over the indicator lamps (ping-pong balls cut in half) were not part of the original design but were added by Mauchly to help demo attendees understand what was going on.

–The Computers, available for rental on Vimeo, is a documentary focused on the original six programmers. Interviews with these women (circa late 1990s, I think) coupled with vintage photos and newsreel footage. (One commentator wondered how long it would take before ENIAC-like machines would be checking up on your income tax. Not sure he took the possibility seriously, but the answer turned out to be…as near as I can determine, about 14 years.)

—The University of Pennsylvania also has an anniversary post with several pictures of ENIAC and some of the people involved; it also includes a picture of three women operating a mechanical differential analyzer–when used for the trajectory work, a differential analyzer took about 30 minutes to calculate a single trajectory (compared with about 20-40 hours for a human), but was somewhat less accurate…also, the analyzers had an irritating habit of breaking down just before a run was finished. See my post on mechanical analog computers: Retrotech–with a future?

–The original ENIAC patent is here and includes a very detailed description of the machine’s circuitry and operations. The inventors point out that there has been a very large set of important problems which could not be addressed by analytical methods (ie, the algebraic transformation of symbols in equations) but which required tremendous step-by-step computational efforts…as examples, they mention Hartree’s work on the structure of the atom, which required computations extending over fifteen years, and a then-contemporary problem involving the supersonic wind tunnel at the Aberdeen Proving Ground.

There are still plenty of problems which cannot be solved by today’s much-faster computers in a realistic (or even non-astronomical) timeframe; this is the primary driver for the interest in quantum computing.

ENIAC represented numbers in decimal format instead of the binary format that has become universal. This is somewhat responsible for the large size of the components. It consumed 150 kW of power, and it looks like it was around as fast as the original IBM PC; the wide decimal format and dedicated math hardware give it an edge over the 8-bit PC that helps to make up for the slow (100 kHz) clock rate. The 10-digit decimal registers are slightly larger than 32 bits.
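The register-size remark is easy to check with a few lines of Python. This is only a sketch of the arithmetic: it counts how many bits are needed to distinguish every 10-digit decimal value and ignores the sign, and the variable names are illustrative.

```python
import math

DIGITS = 10
distinct_values = 10 ** DIGITS            # 0 .. 9,999,999,999
bits_needed = math.log2(distinct_values)  # 10 * log2(10)

print(f"{DIGITS} decimal digits ~ {bits_needed:.1f} bits")
print("fits in 32 bits:", distinct_values <= 2 ** 32)
```

The result comes out a little over 33 bits, so a 10-digit register holds slightly more than a 32-bit word can.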

I wonder if there is enough generating capacity in the U.S. to run an ENIAC version equivalent to a smart phone.

Time for a real world Butlerian Jihad. A world in which the likes of Gates, Zuckerberg, etc., who however you want to phrase it are socially odd, if not outright impaired, get obscenely rich and powerful building tools that are a tyrant’s fantasy, is not one to celebrate, or one that has any sort of decent future. I suspect the 21st century is going to make the 20th look like paradise. The question is if it’s possible to pull back. I don’t see how.

MCS…probably depends on what the smart phone is doing. If you have it solving ENIAC-type mathematical problems, the answer is probably a lot lower in power terms than if you are trying to run an ENIAC version that encompasses the graphics processing on the phone.

“I suspect the 21st century is going to make the 20th look like paradise.”

A lot can happen in 100 years. Look at the 20th Century. Queen Victoria was still alive at the beginning of the 20th Century, and Russia was still under the Czars. Still in the future in 1901 lay the Ford Model T, the airplane, the polio vaccine, the nuclear reactor, global telephony, the computer, man on the Moon, the cell phone. By the end of the 20th Century, the sun had set on the British Empire (and the other European empires too), and the English had reluctantly given Hong Kong back to a rapidly industrializing China. And more people on Planet Earth were living longer, better lives as public health, medicine, and nutrition all improved over the century.

Yes, the bit in the middle of the 20th Century was a downer. But the long term trend over the 20th Century was clearly positive. Matt Ridley’s book “The Rational Optimist” makes the case that this pattern of fluctuations about a long-term improving trend has been repeated century after century.

It is just our horrible luck to have to face one of those downward fluctuations over the next few decades. But perhaps that is our appropriate punishment for having taken our collective eyes off the ball in the last half century.

Brian…A lot of people in the 1930s thought the only possible outcomes for the US would be Russian-style Communism or Italian-style Fascism (or worse)…and that the then-modern technologies of telecommunications, motor transport, and aviation would ensure that once the boot was planted, it would stay planted forever. Very fortunately, it didn’t work out that way.

After Hiroshima and Nagasaki, and throughout the Cold War, a lot of people thought that a nuclear war between the US and the Soviet Union was inevitable. Didn’t happen.

There is plenty of reason to be concerned about the use of computer & communications technology for surveillance and information-control; pushback, though, seems to be building momentum.

150 kW is about 200 HP whether it was doing anything or not, a pretty big electric bill. To come up with a really accurate answer is more work than I want to do unless I was getting paid.

A rough answer: Compared very roughly to an iPhone 12. The single core clock rate at 3 GHz is 30,000 times the ENIAC. It’s really hard to find a number for how much power it uses and it obviously includes the screen and all the peripherals. So I’m going to WAG it at about a watt. 150,000 x 30,000 gives a factor of 4.5 billion in energy per operation, so matching the phone takes 4.5 billion x 1 watt, or about 4.5 GW. A quick search says that U.S. capacity was a little more than 1,000 GW in 2019. So on clock rate alone the capacity is there, though it would take the output of several large power plants.
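The back-of-envelope estimate can be re-run as a short Python sketch. It uses only the figures quoted in this thread (150 kW and 100 kHz for ENIAC, 3 GHz and a guessed 1 W for the phone, roughly 1,000 GW of U.S. capacity) and assumes, very crudely, that speed scales with clock rate alone and that ENIAC-style hardware could be scaled up linearly. The variable names are illustrative.

```python
ENIAC_POWER_W = 150_000    # 150 kW, as quoted in the thread
ENIAC_CLOCK_HZ = 100_000   # 100 kHz
PHONE_CLOCK_HZ = 3e9       # 3 GHz single core
PHONE_POWER_W = 1.0        # the commenter's guess
US_CAPACITY_W = 1_000e9    # roughly 1,000 GW in 2019, as quoted in the thread

# How many times faster the phone's clock is than ENIAC's.
speed_ratio = PHONE_CLOCK_HZ / ENIAC_CLOCK_HZ

# How much more energy ENIAC spends per clock tick than the phone.
efficiency_factor = (ENIAC_POWER_W / PHONE_POWER_W) * speed_ratio

# Power for enough ENIAC-style hardware to match the phone's clock rate.
power_needed_w = ENIAC_POWER_W * speed_ratio

print(f"speed ratio: {speed_ratio:,.0f}")
print(f"efficiency factor: {efficiency_factor:,.0f}")
print(f"power needed: {power_needed_w / 1e9:.1f} GW")
print(f"share of U.S. capacity: {power_needed_w / US_CAPACITY_W:.2%}")
```

Under these assumptions the scaled-up machine needs ENIAC’s 150 kW multiplied by the speed ratio, which is the same thing as the efficiency factor multiplied by the phone’s 1 W. Multiplying the efficiency factor by ENIAC’s wattage instead would count the 150,000 twice.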

Of course, without the graphics, not much of a smart phone. I suppose if I had the code, I could run it on something like an Arduino, which would avoid all the overhead. There are literally hundreds of processors to choose from with all sorts of clock rates and with and without floating point hardware. Power consumption measured in mW (thousandths of a watt).

Maybe a fairer comparison would be to something like a TI programmable calculator but it would still show how far things have progressed while there still exist seemingly simple problems that are beyond the capability of present hardware.

MCS…somebody programmed a restored IBM 1401 (vintage 1960) to do bitcoin mining. He calculates that to mine one bitcoin block (worth about $6000 at the time he wrote the article) on this machine would take about 40,000 times the current age of the universe.

http://www.righto.com/2015/05/bitcoin-mining-on-55-year-old-ibm-1401.html

ENIAC somewhat faster for this application, I think, so maybe only about 4,000 times the current age of the universe.

I wonder how the amount of energy being used for mining bitcoin, ethereum etc compares with the amount of energy used for real, physical gold mining.

Yes, David, I read Witness a few decades ago and thought, haha, those silly people who thought Communism was going to win. But I don’t think that means we are in the clear. Above all else, demographic implosion is a unique phenomenon that strongly differentiates us from a few generations ago. Not just that an aging society has serious challenges to face, but the fact that people have stopped having children shows that something (or lots of somethings) is seriously wrong with our society. The total collapse of most small towns, and local industry, also demonstrates that our society is far more fragile than it was even 50 years ago. And I don’t see any way to describe the fusion of the Democrat party with Big Tech in the last few years as anything other than fascist.

“There is plenty of reason to be concerned about the use of computer & communications technology for surveillance and information-control; pushback, though, seems to be building momentum.”

Does it? I don’t see any real sign of it. I said last spring and summer that I wasn’t that concerned with covid control measures because people wouldn’t tolerate them being too extreme or too long lasting. I was clearly very wrong.

Brian…re the demographic implosion, I just read a short book called ‘Depopulation’ by Philip Auerswald and Joon Yun. Their argument is that (a) reduction in childbearing occurs in all societies as they become more affluent, and (b) this has major implications for investors. (The focus of the book is really the investment implications)

I’ll probably do a post and discussion thread on this topic sometime soon; it’s an important one.

According to at least one source, the Eckert/Mauchly proposal went initially to Dr John Brainerd of the electrical engineering department, whose response was along the lines of ‘interesting…we may have to do something like this if the labor shortage gets worse.’ It was only when a mutual friend mentioned the idea to Goldstine that rapid action started happening. In a ‘remote work’ environment such as those that have become common over the last year, it seems unlikely that this idea transfer would have happened; indeed, if Mauchly had been attending the summer seminar over a Zoom equivalent, he might well have not ever heard of the trajectory problem in the first place.

I’ve been personally involved in several important product/market initiatives which happened only because of casual conversations in hallways or over drinks. There are a lot of benefits to remote work, but much of importance can be lost as well.

(Brainerd apparently did do an excellent job in helping to drive ENIAC construction once the project was kicked off)

Whatever the current state of affairs may look like, the long-term trends do not bode well for centralized control of anything. It doesn’t work, never has, never will. Complexity and rapid response to rapidly changing conditions militate against centralization and control.

What we need is less centralization, less control, and more flexible cellular structures. The big tech firms are already turning into dinosaurs, inflexible doctrinaire centers of stasis and ossification. You can’t keep doing the same things over and over again, expecting that this time, it’s gonna work. Because, it won’t.

Look at the difference between SpaceX and NASA; nimble little flexible organizations outdoing the big static ones, every time. Does anyone think that Google will be around in a generation? Will any of the other tech giants manage to even last as long as IBM did?

Lasting success isn’t going to come from the monolithic top-down model. It never has, and it never will. What does succeed, over the long haul, is the small cellular structures that can grow, thrive, and die without killing the overall structure they’re part of. The control freaks want to control everything, but what they’re doing is the equivalent of scleroderma, where the body starts calcifying and turning to stone. Once the process takes over, the entity has started its death throes.

You can’t fight it. All you can do is minimize the issue, and you do that by eschewing these vast structures we’re so damn fond of. NASA would be better off if it had remained like the NACA organization it superseded, merely managing development monies and picking projects for civil firms to actually engineer and run.

Kirk…re centralization, the current vogue for ‘big data’ is going to drive a lot of this, often leading to situations like those sometimes seen in chain stores with a ‘push’ inventory system in which local management has little or no input as to what is ordered…sometimes with extreme results like the snow blowers in south Florida, more often, less-visible erosions of effectiveness & efficiency.

See Zeynep Ton’s ‘The Good Jobs Strategy’ for discussion about how some of the expensive inventory-control systems actually work out in practice:

https://chicagoboyz.net/archives/60771.html

continuing re Centralization…

A business friend was fond of the phrase ‘synergy costs money.’ Synergy is very seductive, however: it’s often easy to make a case that combining activities X and Y and Z is more efficient than having X and Y and Z done by separate teams…it is harder to show, or even to identify, what gets lost when centralization happens.

A case in point is Jean Bartik’s experience at UNIVAC after that company had been acquired by Remington Rand. It surely must have seemed that the best way to sell this new computer was to use the large sales force that was already in place (selling typewriters, punched card machines, etc), bringing in people from the technical staff as necessary for sales support, rather than to create a whole new sales organization. But Bartik’s experience was that the sales reps, at least the ones she interacted with, were doing very well selling the traditional product line, and their main interest in the UNIVAC system was getting the customer to schedule a meeting…so that the rep could sell them more typewriters.

Urban renewal was big government coming in with one-size-fits-all destruction of cities large and small. In general it is now agreed that these policies destroyed cities all over the country. And yet in nearly every instance nothing has risen out of the ashes. When the government (or big business, which by now have merged pretty effectively) obliterates the small and local, it seems to do a pretty good job of basically salting the earth behind it. I’m not at all optimistic that there is a way to pull back from the cult of big.

“Big” and “Small” *can* sometimes be a worthwhile combination…in drug development and medical devices, for example, it’s not uncommon for a company to start with some seed funding, go through some angel and VC rounds to the level of maybe $10-20MM invested in the company, and then get sold to a much larger player that has the manufacturing capability, the distribution network, and the funding to carry the product through human trials if that is required.

Back in the ’50s, my father was a bystander to an early computer inventory disaster. The system worked by having an IBM card for each item. The idea was that as each item was drawn out, the card would be sent to the home office where the card would be processed and another item with a new card returned. What could go wrong? In short, everything imaginable and quite a lot that hadn’t been. They had to return to monthly physical inventory within months.

Target’s conquest of Canada was turned into a rout of Napoleon fleeing Moscow proportions because of a bad inventory system.

Some people think that stores like Home Depot and Wal-Mart assign customer service a low priority. This is wrong, they don’t consider customer service at all.

To err is human, to screw it up at light speed takes a computer.

MCS…I wrote about the Target Canada debacle in 2016; the ‘Canadian Business’ article that I linked is still up. Absolutely horrifying.

Particularly noteworthy:

“Business analysts (who were young and fresh out of school, remember) were judged based on the percentage of their products that were in stock at any given time, and a low percentage would result in a phone call from a vice-president demanding an explanation. But by flipping the auto-replenishment switch off, the system wouldn’t report an item as out of stock, so the analyst’s numbers would look good on paper.”

As I said at the time, yet another example of poor incentive-system design. The same people who are judged on in-stock percentages should also be judged on revenue and profitability for their items. Then any gamesmanship with out-of-stock will show up in the weak sales caused thereby.

When designing incentive systems, it is wise to think about what *direction* the incentive pulls people in, and then add a countervailing incentive in a different direction. Measure your sales reps only on revenue, and you will get a lot of sales of low-margin but easy-to-sell products.

You can screw up incentive systems just fine without any computers (as the Soviets demonstrated), but computers can certainly ‘help.’

That’s probably where I read it in the first place, I’ll have to admonish my bibliographer.

I think the rule for incentive systems is that they always produce the opposite of what’s wanted. For some reason the people being incentivized tend to work it for the most return with the least work. This always comes as a huge surprise to those that set them up.

On incentives, we have been visiting my daughter in law and grandkids the past few days. She works for a company that puts on meetings. She has been very successful but, needless to say, the Covid panic has badly hurt their business. She has recently been required to attend “Racism sessions” online. One employee posted a picture of himself with a sheep image on a tee shirt with a circle/slash suggesting “No sheep.” The HR director forced him to delete the photo but another employee with a Bernie tee shirt and a “vote” sticker was approved.

I wonder if the people running these companies consider the loss of half the country a problem?

It’ll be just like the broadcast networks, a huge surprise when the audience, or customers, as the case may be, disappear.

Whenever my annual sexual harassment training comes up, I always ask around to find out who complained that I was doing it wrong. Probably a good thing I’m not in the comedy business.