A friend and I have been making day trips to the San Francisco Bay Area. Sometimes the destination is set when we’re halfway there. Usually, we know before we leave. Some things in San Francisco seem just as we remember them from 60 years ago. Other things seem bizarre and foreign.

Last summer, one of those trips turned to the bizarre. We did something I’ve wanted to do for years: park the car in Vallejo, a good 30 miles from San Francisco on the other side of the Bay, and take the commuter ferry. You solve the parking problem, and for the princely sum of $11 round trip (the senior rate!) you get a nice 45-minute ride on the Bay, past the silent cranes of the old Mare Island Naval Shipyard and under the Richmond-San Rafael Bridge, to the old Ferry Building on the Embarcadero.

When the boat docked, we were surprised to find a lot of restaurants in the old Ferry Building; there must have been 10 to 15 of them. There was even a restaurant specializing in caviar, offering one plate for $850! Only in San Francisco, I thought, though maybe you’d see something like that in Manhattan.

We chose the restaurant specializing in Mexican food and got some tacos. For considerably less than $1700 (for 2!).

Afterwards we decided to walk down the Embarcadero to Fisherman’s Wharf.

We passed all of the old, empty shipping terminals with their pier numbers on the front. There must have been 20 or so. I was trying to imagine what they looked like in the 50s, with passenger ships in port and the terminals full of suitcases, taxis, porters, and happy people setting off on an adventure or returning to loved ones. Now, except for one where one of those new “mega cruise ships” was docked, all were empty. One of the warehouses was being used as an indoor parking lot.

Along the way I saw something my mind refused to accept at first. You know, when your eyes are reporting something to the mind but the mind is laughing, telling you, “It can’t be. Try again, and send me something believable!”

It was an older man with long gray hair, completely naked. He was suntanned all over. And I mean all over. Which isn’t an easy feat in San Francisco.

Know how you can spot the tourists in the summer there? They are dressed in summer clothes. Anyway, he is strolling down the Embarcadero seemingly without a care in the world. And just as strange, nobody on the walkway is giving him a second glance. I remarked to Inger that most people who wish to flaunt their nudity in public really shouldn’t.

Anyway, my preamble is meandering toward the main subject of today’s post, but I wanted to tell you all about a memorable past trip. We’ve had others, perhaps the subject of a later post: some great ones and some duds.

A few weeks ago I wrote of another trip in which we saw the (alleged) beginnings of Google at Buck’s Restaurant in Woodside, down the Peninsula. After lunch we went to the Google complex in Mountain View. It was so large that I have come to call the area Googleville. Googleville is not simply some industrial park but an area that seems to go on… and on. As on our trip to Facebook, on the shores of the Bay by the Dumbarton Bridge in Menlo Park, I wondered why anyone needed something so huge, with so many people, for something as relatively simple as an advanced program, lots of servers, and a billing department for the advertisers. Of course, you need people to develop the algorithms that decide which ads each user sees (and why am I, on the West Coast, seeing ads on Facebook for a theater in Pennsylvania?). And perhaps you need people to refine the algorithms for how users find things; Google’s search algorithm, which revolutionized the Internet, is a closely guarded secret that I am sure keeps evolving. But if you visited either of these complexes, you’d wonder why they are small cities within cities. Why do you need 150,000 employees?

But then, I’m not a multi-billionaire.

So last week, we returned to the outskirts of Googleville to see the Computer History Museum. I didn’t think Inger, hardly a computer geek, would find it interesting, but I was wrong.

Think modern computer history starts in the late 1940s?

You would be wrong.

I’m giving you just a very brief highlight of this museum’s exhibits, leaving out 99.9%. I thought the best way to write the rest of this post was to give you a virtual tour, just as I saw it, so I’m scrolling sequentially through my iPhone’s camera roll.

Years ago, I heard what I thought was a wonderful description of the electronic computer: it is the only machine Man has invented that has no predetermined end purpose.

The User defines the purpose.

Here’s another one from the museum:

“We become what we behold. We shape our tools, and then our tools shape us.”

—-Marshall McLuhan

We start with an explanation of the first 2,000 years of computing, including an exhibit on the ancient Greek Antikythera mechanism:

The Pre-Electronic Computer Age

A CLASSICAL WONDER:

THE ANTIKYTHERA MECHANISM

Divers exploring a Roman shipwreck off the Greek island of Antikythera in 1901 found the remains of a baffling device. Fifty years later scholars slowly began to unlock its ancient mystery.

The Antikythera mechanism is the oldest known scientific calculator. A complex arrangement of over 30 gears could determine with remarkable precision the position of the sun, moon, and planets, predict eclipses, and even track the dates of Olympic Games.

This remarkable 2,000-year-old device has revolutionized our understanding of the ancient Greeks’ abilities: both their scientific acumen and their craftsmanship.


There were a number of exhibits in the Revolution section, nothing electronic of course, but mechanical implements and tables to aid the user in his work. This one was invented in 1869 by Elizur Wright, considered the father of life insurance, as an aid in making insurance calculations.

I’ve always found the insurance industry interesting, whether it’s the pesky people calling to offer you extended automobile warranty insurance, life insurance, or any other kind of insurance. Insurers have risk tables, built up from experience, and are betting that you will not need the coverage within their projected window, while the consumer is betting that he will. Which seems, if you think about it, a bit depressing: betting that you are going to have an accident, die, or break down sooner than they believe you will. An insurance consumer should rejoice if their fears go unrealized and they never have to collect!

Ever wonder if people consider you a nerd? (Never mind the fact that these days some of the nerds are billionaires). This miniature tie clasp in the form of a slide rule was a popular retirement gift for engineers in the 1950s.

Ever wonder why kitchen work surfaces are called “counters”?

…This merchant’s table abacus or “counting table”

served as both calculator and shop counter.

…COUNTERS BECOME COUNTERTOPS!

Up through the 1700s, the tabletop abacus or counting board was widespread in Europe.

Shopkeepers traditionally faced their customers across the device as they added up purchases.

This “counting board” evolved into the English word “counter” to describe the working surface in a store, and later any working surface, like kitchen counters.

The real impetus for electronic computers came back in 1890, with America’s census.

…Punched cards got their first big test in America’s 1890 census. Half a century later, they made possible another census: a comprehensive catalogue of British flora and fauna. Documenting the island’s ecology demanded technology both portable and reliable.

Punched cards fit the bill.

IBM traces its genesis to the purchase of Herman Hollerith’s Tabulating Machine Company. It was Hollerith’s machine that revolutionized the counting of the 1890 census.

Herman Hollerith’s first tabulating machines opened the world’s eyes to the very idea of data processing. Along the way, the machines also laid the foundation for IBM.

… The Census Bureau put Hollerith’s machine to work on the 1890 census. It did the job in just two years, and saved the government US $5 million. After that, Hollerith turned his invention into a business: the Tabulating Machine Company. By 1911, Hollerith’s tabulator had been used to count the populations of Austria, Canada, Denmark, England, Norway, the Philippines, Russia, Scotland and Wales.

… Not only could the machines count faster, but they could also understand information in new ways. By rearranging the wires on a tabulating machine, the contraption could sort through thousands or millions of cards. Businesses soon realized that the information on those cards didn’t have to be about members of the population—the data could be about a product, or an insurance customer, or a freight car on a rail line. The tabulator allowed users to learn things they never knew they could learn, and at speeds no one thought possible. (Bold characters mine)

As an aside, the earliest computer terminals paid homage to IBM’s standard 80-column card: their screens were 80 columns wide.

My first professional programming job was writing and maintaining programs charting aircraft production for Cessna Aircraft in Wichita, Kansas. I figured I would be working for a company that could afford the latest equipment, and it could: it had one of the newest (at the time) IBM mainframes, a 4341. But the custom software, written in COBOL (Common Business Oriented Language), was rooted in the 1960s, when card punches (and readers) were in widespread use. And as I was to learn, that is common, even today. Large companies have too much invested in software that has been written and modified many times over the years to adjust to changing circumstances simply to throw it all away and start from scratch. I once had to change a Cessna production program that had been written in the 60s; 20 years later, in my time, it carried the names of 20 programmers who had made changes to it over the years.

Anyway, for some of the programs in the 1960s, input was done in 80-column format, with an IBM card punch. You would put an “X” in the appropriate column and the card punch would punch the card. By my time, terminals had replaced the card punch for input, but not in the way we would expect today. The terminal screen had a basic 80-column layout, and the operator would type the “X” in the appropriate column on the screen.
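To make the fixed-column idea concrete, here is a minimal Python sketch. The record layout (field names and column positions) is entirely hypothetical, not Cessna’s: every card or screen line is exactly 80 characters, and a field means something only because of which columns it occupies, so an “X” in column 42 matters only because the program says so.

```python
# A minimal sketch of fixed-column, 80-character "card image" records.
# The layout below is hypothetical, for illustration only.
CARD_WIDTH = 80

# Field name -> (first column, last column), 1-based and inclusive,
# the way card layouts were traditionally written up.
LAYOUT = {
    "part_number": (1, 10),
    "quantity": (11, 15),
    "rush_flag": (42, 42),  # an "X" here marks a rush order (made up)
}


def make_card(fields: dict[str, str]) -> str:
    """Build an 80-column card image, placing each value in its columns."""
    card = [" "] * CARD_WIDTH
    for name, value in fields.items():
        first, last = LAYOUT[name]
        width = last - first + 1
        if len(value) > width:
            raise ValueError(f"{name} does not fit in {width} columns")
        for offset, ch in enumerate(value):
            card[first - 1 + offset] = ch
    return "".join(card)


def read_card(card: str) -> dict[str, str]:
    """Read the fields back out by column position alone."""
    if len(card) != CARD_WIDTH:
        raise ValueError("a card image must be exactly 80 columns")
    return {
        name: card[first - 1:last].strip()
        for name, (first, last) in LAYOUT.items()
    }


card = make_card({"part_number": "A-1234", "quantity": "25", "rush_flag": "X"})
print(len(card))                     # 80
print(read_card(card)["rush_flag"])  # X
```

Nothing in the card itself says which column is which; the layout lives in the program, which is one reason old card-era software is so hard to untangle.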

By the way, have you ever wondered why the largest computers are called “Mainframes”? (probably not until I raised the question 😉 )

Electronic Computers from the 1940s…

WHY ARE THEY CALLED “MAINFRAMES”?

Nobody knows for sure. There was no mainframe “inventor” who coined the term.

Probably “main frame” originally referred to the frames (designed for telephone switches) holding processor circuits and main memory, separate from racks or cabinets holding other components. Over time, main frame became mainframe and came to mean “big computer.”

When I started programming, microcomputers were just getting started. One of the mainframe computers I used for learning was an IBM System/370 Model 135. In the 60s, IBM had a family of computers of varying power, all under the System/360 name. In the 70s, it came out with the System/370. All the software was backward-compatible, of course. In the early 70s, California’s massive Bank of America used a high-end IBM 370/168 at its San Francisco headquarters to talk with its hundreds of branches around California and even its thousands of ATMs. If I remember correctly, it had 128 MB of memory. A PC today has far more than that.

By the way, this was before computer screens – all communication was done via teletype.

THE FIVE BILLION DOLLAR GAMBLE

IBM’s revenue in 1962 was $2.5 billion. Developing System/360 cost twice that. Company president Tom Watson, Jr. had literally bet the company on it.

Watson unveiled System/360 in 1964 – six computers with a performance range of 50 to 1, and 44 new peripherals – with great fanfare. Orders flooded in. The gamble paid off.

“System/360…is the beginning of a new generation not only of computers but of their application in business, science and government.”

This particular CPU, or Central Processing Unit, was the Model 30 of the new System/360 line. It sat at the low end of the System/360’s range of processing power.

…IBM System/360 Model 30 Computer – CPU (Type 2030), IBM, US, 1964

McDonnell Aircraft Corporation bought the first Model 30, the smallest and least expensive of the three System/360 models shipped in 1965. It could emulate IBM’s older small computer, the 1401, which encouraged customers to upgrade.

Speed: 34,500 adds/s; Memory size: 64K; Memory type: Core; Memory width: 8-bit; Cost: $133,000+

Note the memory size of 64K (65,536 bytes), versus today’s PCs, whose memory runs into the billions of bytes. It was core memory, and it was very expensive. Now you can buy billions of bytes of storage for a few dollars.

Some of the peripherals that went with this System/360. In the foreground you can see a disk drive. I don’t know its capacity, but I know it is less than 20 MB. Disk space was so expensive and scarce that tape drives were used for most information storage. Tape reels came in 2,400-, 1,200- and 600-foot lengths. Retrieving information was laborious because it was stored sequentially on the tape: the drive might have to wind back 1,000 feet or more to reach the file the program called for. Disk drives were used for program storage and for files extracted from tape for manipulation.
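Why was tape so slow for retrieval? Here is a toy Python model, assuming made-up file positions in feet along the reel and ignoring real drive speeds. It simply counts how much tape has to pass the read head to reach each requested file in turn, which is the cost a disk’s direct access avoided.

```python
# A toy model of sequential (tape) access.
# Positions are hypothetical distances, in feet, from the start of the reel.
FILES_ON_TAPE = {"payroll": 100, "inventory": 900, "orders": 2000}


def tape_feet_wound(requests: list[str], start: int = 0) -> int:
    """Total feet of tape moved to visit each requested file in order.

    A tape head can only reach a file by winding forward or backward
    past everything in between.
    """
    position = start
    total = 0
    for name in requests:
        target = FILES_ON_TAPE[name]
        total += abs(target - position)
        position = target
    return total


print(tape_feet_wound(["orders", "payroll", "inventory"]))  # 4700
print(tape_feet_wound(["payroll", "inventory", "orders"]))  # 2000
```

The same three files cost 4,700 feet of winding in one order and 2,000 feet in reel order, so the order of requests matters on tape in a way it largely does not on a disk.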

Something that I had forgotten until now: “JCL,” the Job Control Language for IBM mainframes. It was (and is) almost as involved as a programming language. It told the computer where the files were (on tape, for example), among many other things.

I must be getting old, remembering this stuff 😉

IBM core memory cabinet from its Military Products Division for the US Army and US Air Force SAGE Early Warning System*, 1950s. Core memory, the predecessor to semiconductor memory, was very expensive. These cabinets held kilobytes (thousands of bytes) of memory, not the megabytes (millions) or gigabytes (billions) common today.

At the time, IBM estimated that a large company had 90% of its computer dollars tied up in hardware and 10% in custom-written software. Now it is the reverse, probably even more extreme, but let’s say 90% goes to software. I’m talking about custom-written software, not the off-the-shelf software most people buy for their microcomputers.

This was one of the revolutions of the microcomputer: with such a huge potential user base, prices could drop dramatically. A program compiler (software that converts the code a human writes in a given language into a program file the machine understands) would probably cost six figures (in then-dollars) for a mainframe, five figures for the minicomputer of a smaller business, and hundreds of dollars for a microcomputer.

That is what has amazed me.

Anyway, I remember a boss at one job in the 80s who had worked for Aerojet during the heady days of the Apollo program, on a mainframe of course. Of microcomputers, which had only a small market share in the early 80s, he said they were “…just toy computers! Nothing will ever come of them!”

That was a common attitude among IT professionals of the day. Walking into the “computer room” of a mainframe in the 60s or 70s was usually an awe-inspiring experience. The floors were usually white, and raised a foot or so to make room for all the cables interconnecting the CPUs and massive peripherals like tape drives and disk drives. Over it all, you would hear the constant hum of large fans cooling the room and carrying away all that heat. Programmers were considered the high priests of the new Information Age. The microprocessor fostered an amazing revolution, and some multi-billion-dollar computer companies that couldn’t adjust to the massive shift dissolved.

Anyway, let’s continue the museum tour. Please realize that we are only scratching the surface here; I took almost 300 pictures (and now lament not taking a few more). I believe there was a quote from one of the Intel founders saying he didn’t believe semiconductor memory had any future or was worth patenting, but I cannot find it. Unless my memory is flaky (always a possibility), even the computer revolutionaries couldn’t always see the future!

To publish all these photos (even at my reduced size) and use up valuable disk space would be pushing the hospitality of the Chicago Boyz!

The amazing thing to me was that they had actual examples of all of these computers, from the ENIAC of the 1940s to the Cray-1 supercomputer of the 1970s to the “Kitchen Computer” that Dallas-based Neiman-Marcus offered in its famous 1969 Christmas catalog.

ENIAC

In 1942, physicist John Mauchly proposed an all-electronic calculating machine. The U.S. Army, meanwhile, needed to calculate complex wartime ballistics tables.

Proposal met patron.

The result was ENIAC (Electronic Numerical Integrator And Computer), built between 1943 and 1945: the first large-scale computer to run at electronic speed without being slowed by any mechanical parts. For a decade, until a 1955 lightning strike, ENIAC may have run more calculations than all mankind had done up to that point.

As I was assembling this post, it amazed me how many huge enterprises were started by talented individuals who left their previous companies. They felt stifled and went on to start their own successful ventures, their ideas now free to germinate.

Seymour Cray was one of those individuals. An immensely talented engineer at Control Data, a very successful mainframe company of the 1960s, he went on to design his supercomputers at his own company, Cray Research. Control Data was one of those “Go-Go” stocks of the 1960s. Investing early in Control Data, as in Xerox or IBM, would probably have made you a millionaire.

His Cray-1 and Cray-2 supercomputers were so powerful that they were put on an export-restricted list. They were used for such things as predicting weather patterns or designing nuclear weapons.

BYTES FOR BITES:

THE KITCHEN COMPUTER

Why would anyone want a computer at home? Before the personal computer era and its avalanche of possible uses, the perennial answer was: “to store recipes.”

Neiman-Marcus took that literally. The 1969 Christmas catalog featured the Kitchen Computer. For $10,600 you got the computer, a cookbook, an apron, and a two-week programming course.

Inside the futuristic packaging with a built-in cutting board was a standard Honeywell 316 minicomputer. But the console interface featured binary switches and lights. (Does 0011101000111001 mean broccoli? Or carrots?)

Not surprisingly, none were sold.
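The catalog joke works because the switches and lights showed raw binary. As a small Python illustration (the number-to-vegetable table is, of course, invented), the placard’s 16-bit pattern is simply the number 14905; only a program could decide whether that means broccoli.

```python
# Interpreting the placard's 16-bit switch pattern.
switches = "0011101000111001"

value = int(switches, 2)  # read the switches as a base-2 number
print(value)              # 14905
print(hex(value))         # 0x3a39

# Hypothetical "recipe codes": the meaning lives in the program, not the bits.
RECIPE_CODES = {14905: "broccoli", 14906: "carrots"}
print(RECIPE_CODES.get(value, "unknown"))  # broccoli
```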

Its Christmas catalog was legendary. Although I never saw it, one year it supposedly offered a flying saucer.

My nomination for the world’s first PC would be the Altair 8800.

Altair 8800, Micro Instrumentation and Telemetry Systems (MITS), US, 1975

The basic Altair 8800 had only toggle switches and binary lights for input/output. Yet it was the first microcomputer to sell in large numbers: more than 5,000 in the first year. Most customers were hobbyists, who tolerated a primitive interface.

MOORE’S LAW

The number of transistors and other components on integrated circuits will double every year for the next 10 years. So predicted Gordon Moore, Fairchild Semiconductor’s R&D Director, in 1965.

“Moore’s Law” came true. In part, this reflected Moore’s accurate insight. But Moore also set expectations- inspiring a self-fulfilling prophecy. Doubling chip complexity doubled computing power without significantly increasing cost. The number of transistors per chip rose from a handful in the 1960s to billions by the 2010s.
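The arithmetic behind Moore’s 1965 prediction is plain compounding: doubling every year for 10 years multiplies the count by 2^10, or 1,024. (In 1975 Moore revised the pace to a doubling every two years.) A short Python sketch, with a hypothetical starting count of 64 components chosen only for illustration:

```python
# Compound doubling, as in Moore's prediction.
def projected_components(start: int, years: int, years_per_doubling: float = 1.0) -> float:
    """Components after `years`, doubling every `years_per_doubling` years."""
    return start * 2 ** (years / years_per_doubling)


start = 64  # hypothetical starting count, for illustration only
print(projected_components(start, 10))       # 65536.0 (a 1,024-fold increase)
print(projected_components(start, 10, 2.0))  # 2048.0 (doubling every two years)
```

A modest-sounding change in the doubling period makes an enormous difference over a decade, which is why the expectation Moore set mattered so much to the industry.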

Software: A Bit on Unix, Photoshop, Texts and MP3 Files…

We cannot complete this post without talking a bit about software! After all, if I may be a bit poetic, it is the software that brings these circuit boards to life! It’s the operating system, usually millions of lines of code, that brings the machine to life, and then the application programs, or “apps” in the current vernacular, that talk to the operating system and carry out the user’s wishes. It is the compiler (itself sophisticated software) that bridges the programmer and the machine: run once, it converts the programmer’s written instructions into a “machine-readable” file, i.e., a “program” or “app.”

When I started programming in 1980, there was a friendly debate over the nature of software. Is writing it a science or an art?

The debate continues.

I fall on the “art” side. We application programmers get instructions on what the “user” wants. What kind of information? Weather reports or financial reports? All the information that can be found on the Net about a desired subject? When the app is being written, we know what the result is supposed to be, but how you get that result is up to us! If programming is an art, and compared (as I do) to painting, a program can be either a Jackson Pollock or a Renoir.

And to see a Renoir, or make one, is a thing of beauty. Other good programmers can look at a well-written program and see the easily followed logic just flow, making the best use of the rules of the chosen programming language. And there is no one way to write it. Give 2 programmers the same results to produce, and I can guarantee you that their 2 programs will be different.

The Cessna program I mentioned before fell into the Jackson Pollock category: stuff going here, there and everywhere, with seemingly no beauty, and excruciating to follow (although there are Jackson Pollock fans, and maybe they would say I simply don’t understand it! Point taken).

All those years ago, I was assigned to find a bug in that program and spent 3 days following a particular section of code. At the end of those 3 days, having traced all of it, I realized that 1,000 lines of code were just “dead.”

It did all the work the various programmers had asked of it and, in the end, did nothing with the results.

Some of those 20 previous maintenance programmers didn’t really understand the program and just started adding their own code. Like good gardeners, they should have done some pruning!

A well written program is a thing of beauty and if you wrote it, gives you pride. If someone else wrote it, you admire their work.

Anyway.

I came across this at the museum, from someone who agrees with my view:

“Computer programming is an art, because it applies accumulated knowledge to the world, because it requires skill and ingenuity, and especially because it produces objects of beauty.”

— Donald Knuth 1974

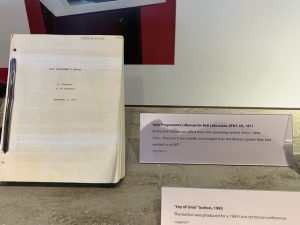

Wherever you are reading this, you are in all likelihood reading it from an Internet server running the Unix operating system, or Linux, a Unix-like system started by a Finnish programmer, Linus Torvalds.

Today, it could be called the one universal operating system, running on many different kinds of computers.

And like a lot of revolutionary things, it was initially created for internal use at the fabled Bell Laboratories. It eventually became the common platform for Internet servers communicating around the world.

The elegant and flexible Unix operating system sprang from Multics, a complex time-sharing operating system developed at MIT in the 1960s. When Bell Labs withdrew from the project, Ken Thompson, Dennis Ritchie, and other Bell researchers created a similar but simpler system for smaller computers.

Bell Labs freely licensed Unix, which was written in C and easily ported to other computers. It spread swiftly.

A Few Origins of Some Well-Known Applications Today

The Origins of Computer Graphics

If you were interested in computer graphics in the 1960s, you probably kept an eye on Utah. The Graphics Lab at the University of Utah Computer Science Department attracted many early, innovative pioneers. Faculty included Ivan Sutherland, fresh from virtual reality experiments at Harvard, who volunteered his Volkswagen Beetle to be digitized.

Students smothered it in a grid of tape to take measurements. The Lab’s research brought advances in art, computer animation, and graphical user interfaces.

Faculty and alumni founded influential firms such as Silicon Graphics, Evans & Sutherland, Adobe, and Pixar.

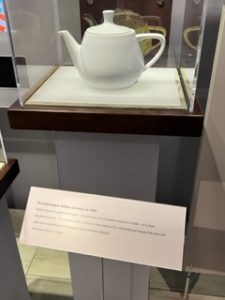

THE UTAH TEAPOT

Computers manipulate data. So, how do you get them to generate images? By representing images as data. Martin Newell at the University of Utah used a teapot as a reference model in 1975 to create a dataset of mathematical coordinates. From that he generated a 3D “wire frame” defining the teapot’s shape, adding a surface “skin.” For 20 years, programmers used Newell’s teapot as a starting point, exploring techniques of light, shade, and color to add depth and realism.

From that research at Utah in the 1970s eventually came the technology behind my newly reproduced auto part, for which the 3-D printer required exact measurements of the old part.

MP3 Sound Files

I, like millions of others, have changed my music-buying habits. The Internet and the digital world were the drivers. I really enjoy listening to SiriusXM in my car. It has a multitude of channels devoted to a hundred interests, among them music from the 40s and 50s on up through the decades to 2010, with a channel for each. If I don’t catch the artist and title on the screen, the Shazam app on my iPhone will tell me. It amazes me to think that virtually all published music has been digitally saved and is available on the Net.

Shazam will “listen” to the music for a few seconds, convert the sound to digital values (for that small musical segment), and then, 99% of the time, match the captured digital segment to a title and artist in its library. Then I go to Amazon, look the song up and, for all of $1.29, download it; later, with other software, I make custom CDs for my car (it is an old car!). I’ve used the app anywhere there is music, from a supermarket’s speakers to the TV to SiriusXM.
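Shazam’s real matching is far more elaborate than anything that fits here, but the general “landmark” idea published by Shazam’s Avery Wang can be sketched as a toy. Assumptions, loudly: the hard signal-processing step (finding the loudest points of a spectrogram) is taken as already done, so each recording is just a list of made-up (time, frequency) peaks, and the song data is invented. Pairs of nearby peaks become hashes, and a true match shows up as many hashes agreeing on the same time offset.

```python
from collections import Counter, defaultdict
from typing import Iterator, Optional

# Toy "landmark" fingerprinting. Assumption: peaks are already extracted,
# so each recording is a list of (time_frame, frequency_bin) pairs.
Peak = tuple[int, int]
Hash = tuple[int, int, int]


def landmarks(peaks: list[Peak], fan_out: int = 3) -> Iterator[tuple[Hash, int]]:
    """Pair each peak with the next few peaks; yield (hash, anchor_time)."""
    ordered = sorted(peaks)
    for i, (t1, f1) in enumerate(ordered):
        for t2, f2 in ordered[i + 1:i + 1 + fan_out]:
            # The hash holds only frequencies and the time gap, so it is the
            # same wherever in the song the clip happens to start.
            yield (f1, f2, t2 - t1), t1


def build_index(library: dict[str, list[Peak]]) -> dict[Hash, list[tuple[str, int]]]:
    """Map every hash to each (title, time) where it occurs in the library."""
    index: dict[Hash, list[tuple[str, int]]] = defaultdict(list)
    for title, peaks in library.items():
        for h, t in landmarks(peaks):
            index[h].append((title, t))
    return index


def identify(clip: list[Peak], index: dict[Hash, list[tuple[str, int]]]) -> Optional[str]:
    """Vote on (title, time offset); a real match stacks votes on one offset."""
    votes: Counter = Counter()
    for h, t_clip in landmarks(clip):
        for title, t_song in index.get(h, []):
            votes[(title, t_song - t_clip)] += 1
    if not votes:
        return None
    (title, _offset), _count = votes.most_common(1)[0]
    return title


library = {
    "Song A": [(0, 10), (1, 40), (2, 25), (3, 60), (4, 33), (5, 12)],
    "Song B": [(0, 50), (1, 20), (2, 70), (3, 15), (4, 44), (5, 61)],
}
index = build_index(library)

# A few seconds of "Song A" starting 2 frames in, with times re-zeroed.
clip = [(0, 25), (1, 60), (2, 33), (3, 12)]
print(identify(clip, index))  # Song A
```

Voting on the offset, rather than just counting matching hashes, is what lets a few seconds captured mid-song line up with the right place in the right recording.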

I have heard music I haven’t heard in decades from the 50s, and have learned to appreciate the big bands of the 40s.

And through this method, I have 4-5 versions of “California Dreamin’,” written and made popular by the Mamas and the Papas in the 60s. I even have an Italian version I heard on The Offer, the Paramount+ miniseries about the making of The Godfather.

The download from Amazon takes a few seconds, thanks to data-compressed MP3 sound files, which are much smaller than the files on a factory-produced CD. It was all made possible by a German researcher named Karlheinz Brandenburg. In 1986, he thought music could be delivered over digital phone lines. A week later, he had developed the code that eventually became MP3.

MP3 files are compact because they discard sounds that are in the music but inaudible to the human ear. And that is a source of contention (and irritation) for audiophiles. They claim that, like the ingredients of a gourmet meal that one can’t individually discern, these inaudible sounds nevertheless contribute to the whole.

… Hearing combines physical structures that capture sound waves, and neural systems that interpret them. Our brains suppress sounds that follow each other too closely, or are drowned out by louder sounds. MP3s exploit these psychoacoustic principles.
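The masking idea in that placard can be illustrated with a deliberately crude Python sketch. Real MP3 encoders use far more detailed psychoacoustic models; the three thresholds below are hypothetical round numbers chosen for readability. A tone is treated as inaudible if it is too quiet to hear at all, or if a much louder tone sits close to it in frequency.

```python
# A crude illustration of frequency masking. Thresholds are hypothetical.
QUIET_LIMIT_DB = 0.0    # at or below this, treat a tone as inaudible
MASK_RANGE_HZ = 100.0   # how close in pitch a louder tone must be to mask
MASK_MARGIN_DB = 20.0   # how much quieter the masked tone must be


def audible(components: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Keep only (frequency_hz, level_db) components a listener would notice."""
    kept = []
    for freq, level in components:
        if level <= QUIET_LIMIT_DB:
            continue  # too quiet to hear at all
        masked = any(
            abs(other_freq - freq) <= MASK_RANGE_HZ
            and other_level - level >= MASK_MARGIN_DB
            for other_freq, other_level in components
        )
        if not masked:
            kept.append((freq, level))
    return kept


tones = [(440.0, 70.0), (480.0, 40.0), (1000.0, 50.0), (5000.0, -5.0)]
print(audible(tones))  # [(440.0, 70.0), (1000.0, 50.0)]
```

In this toy, the quiet 480 Hz tone is drowned out by its loud 440 Hz neighbor and the 5,000 Hz tone is below hearing, so only two of the four tones would need to be stored. That discarded material is exactly what the audiophiles object to.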

The Mother of MP3

…”Tom’s Diner”

While refining his MP3 code, Karlheinz heard Suzanne Vega’s hit “Tom’s Diner” playing on the radio. He realized that this a cappella song would be nearly impossible to compress successfully.

Nearly.

“Tom’s Diner,” Brandenburg’s worst-case scenario, became his test song and Vega herself got dubbed the “Mother of the MP3.”

MRIs

What is an MRI?

MRI machines use a magnetic field, radio waves, and a computer to detect the properties of living tissue. All body parts have water (and thus hydrogen).

An MRI machine creates a magnetic field around your body, causing the hydrogen atoms, which are partially magnetic, to align with the field. When radio waves are applied quickly, they excite these atoms, causing them to emit a unique electronic signal that sensors record and which MRI software uses to create an image.

Painting the Picture

We think of MRI machines as “hardware”. Yet much of their power lies in their software, which controls the magnetic and radio-frequency pulses, and then transforms this data into images.

Depending on the MRI settings, images can highlight fat, fluid, air, bone, or soft tissue in the patient.

Software Inside

Software is a valuable tool throughout the MRI process. During your MRI, technicians use the software to select imaging parameters and administer pulse sequences. After your MRI, software is used to process your images so the doctors can interpret them.

Software systems also archive and display MRI images, allowing doctors to consult and connect patients to far-flung medical specialists when needed.

The museum gives many other examples of well-known applications we use today. When cell phone standards were being developed, engineers built texting into the Global System for Mobile Communications (GSM) standard. Carriers at the time thought it might only be useful for sending announcements to customers. Developers originally thought cell phones would be used only for… phone calls.

It was young Scandinavians who started texting rather than paying for calls, and that’s when the phone companies of the time realized they had another revenue stream. By 2014, texting was generating $115 billion globally.

I guess it took Steve Jobs of Apple to realize the possibilities of a smartphone. Even I was late to that realization, wondering why anyone would care to have a camera built into a phone! Guess that is why I am not a multi-billionaire 😉.

Like my blindness to the potential of the smartphone, texting also initially mystified me. I guess it took the Millennials to show me why it was so popular. To borrow some old computer terms, a phone call could be considered interactive and interrupt-driven. You get a phone call, and you stop whatever you are doing (the interrupt) to answer it. (We will forget about voice mail for this purpose.)

A text, on the other hand, could be considered batch mode. Like the line of jobs a mainframe would receive and process sequentially at its leisure, you get a text and can respond to it at your leisure.
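The interrupt-versus-batch contrast can be sketched in a few lines of Python. This is a toy with made-up tasks and messages, not how any phone or mainframe actually schedules work: a call is handled the moment it arrives, while texts wait in a first-in, first-out queue until the current work is finished.

```python
from collections import deque

# Toy contrast between interrupt-driven work (phone calls) and batch
# processing (texts). The tasks and messages are made up for illustration.


def interrupt_driven(work: list[str], calls: dict[int, str]) -> list[str]:
    """`calls` maps a step number to a caller; that call is answered at once,
    before the step it arrives at."""
    log = []
    for step, task in enumerate(work):
        if step in calls:
            log.append(f"answer call: {calls[step]}")  # drop everything
        log.append(task)
    return log


def batch(work: list[str], texts: list[str]) -> list[str]:
    """Texts wait in a first-in, first-out queue until the work is done."""
    inbox = deque(texts)
    log = list(work)  # finish what you were doing first
    while inbox:
        log.append(f"reply to text: {inbox.popleft()}")
    return log


work = ["chop onions", "stir sauce"]
print(interrupt_driven(work, {1: "a friend"}))
# ['chop onions', 'answer call: a friend', 'stir sauce']
print(batch(work, ["dinner at 6?", "bring bread"]))
# ['chop onions', 'stir sauce', 'reply to text: dinner at 6?', 'reply to text: bring bread']
```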

Call me a slow learner, but this also illustrates how, when new technology arrives, people initially can’t find the best uses for it.

How Does Photoshop Work?

Photoshop is an inspired marriage of math and art.

Digital pictures like all computer data are simply coded series of 1’s and 0’s. Numbers. Manipulating those numbers changes the way the computer draws the image.

The calculations involved are complex, but Photoshop’s user-friendly interface lets people alter an image without ever seeing the math behind it.
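To show what “manipulating those numbers” means, here is a minimal Python sketch on a tiny made-up grayscale image, where each number is a pixel’s brightness from 0 (black) to 255 (white). This is not how Photoshop is implemented; it only shows that brightening or making a negative is arithmetic on pixel values.

```python
# A tiny grayscale "image": brightness values from 0 (black) to 255 (white).
# The pixel values are made up for illustration.
image = [
    [10, 50, 90],
    [130, 170, 210],
]


def adjust_brightness(img: list[list[int]], amount: int) -> list[list[int]]:
    """Add `amount` to every pixel, clamping to the valid 0-255 range."""
    return [[max(0, min(255, p + amount)) for p in row] for row in img]


def invert(img: list[list[int]]) -> list[list[int]]:
    """Make a negative: dark becomes light and light becomes dark."""
    return [[255 - p for p in row] for row in img]


print(adjust_brightness(image, 60))  # [[70, 110, 150], [190, 230, 255]]
print(invert(image))                 # [[245, 205, 165], [125, 85, 45]]
```

The clamp in `adjust_brightness` is why an over-brightened photo loses detail: every pixel pushed past 255 ends up the same pure white.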

Creating Photoshop

John and Thomas Knoll grew up developing photos in their Dad’s darkroom. So when Thomas got a Macintosh in 1987, it was natural for him to try and improve its photo handling abilities.

The result? A program called Display, which he and brother John (employed at special effects pioneer Industrial Light and Magic) expanded into Image-Pro, forerunner of Photoshop.

The Knoll brothers’ photography program was promising…but not profitable. They licensed one version to a scanner manufacturer.

The big break came when Russell Brown, Adobe’s art director, became interested. Brown persuaded Adobe to help refine the Knolls’ software. Adobe released the expanded product Photoshop in 1990.

The initial version, Photoshop 1.0, had 100,000 lines of code. Later versions have over 10 million lines of code.

I found the Computer History Museum to be fascinating, and hope that I have piqued your interest to visit.

03-21-23 Most of the italicized sections of this post were extracted by my iPhone 13 Pro from digital photos I took. Apple’s sophisticated programming looks at all of those pixels and determines what constitutes text, even if the photo was shot at an angle. In what seems to be a microsecond, it highlights that text, allowing one to copy and paste it.

Pretty amazing to me – both the computer power and the programming!

** 03-22-23 0152 When I was sent to Army Air Defense School at Ft Bliss TX back in 1972, we learned on this SAGE computer. Apparently it was extremely reliable and was used until 1983. Pretty amazing for a computer system’s life! As for its memory capacity, I couldn’t readily find it, but I am sure each cabinet held surprisingly little by today’s standards. Under 100KB? When I was sent to Germany, we were under an Air Force detachment and used their computer.

A bit more on SAGE, which in the 1950s was a technological marvel. Many technological innovations from this system were spun off into other products.

03-23-23 2215 I forgot to mention in this post, concerning the marriage of the camera and the smartphone, that a few years ago I read a statistic: more digital pictures are uploaded to Facebook in a year than all the film prints ever made.

When I was there in 2012, one of the displays was a Superman comic book (from 1961) in which Lois Lane tries computer dating:

https://chicagoboyz.net/archives/37982.html

In 1959 I was employed at Douglas Aircraft Company, in the wind tunnel facility across the street from the main plant. My job was to write simple programs on the IBM 650. Since the 650 had only 2000 addressable memory locations, and they were on a rotating drum, programming was challenging. My boss, a genius type, wrote a program he called “SOAP,” Symbolic Optimal Assembly Program, which loaded a program onto the memory drum, using the drum’s rotation speed to choose storage locations so the program would run faster. I spent a lot of time programming the printer’s memory board, which was made up of plugs and wires.
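The idea behind "optimal" placement on a drum can be sketched with a deliberately simplified model. This is a hypothetical illustration, not SOAP's actual algorithm. It assumes 50 word positions pass the read head per revolution (loosely modeled on the 650's 50-word drum bands, taken here as an assumption), that reading an instruction takes one word-time, and that each instruction then takes a made-up number of word-times to execute. A naive layout puts the next instruction at the next address, which has already rotated past by the time the machine is ready; the optimized layout puts it where the head will be.

```python
WORDS_PER_REV = 50  # assumption: word positions passing the read head per revolution


def wait_time(head_pos, location):
    """Word-times spent waiting for `location` to rotate under the read head."""
    return (location - head_pos) % WORDS_PER_REV


def place_program(exec_times, optimize):
    """Place a straight-line program on a hypothetical drum.

    exec_times[i] is how many word-times instruction i takes to execute (made-up values).
    Returns (drum positions, total word-times spent waiting on rotation).
    Assumes the program has fewer than WORDS_PER_REV instructions.
    """
    positions = [0]
    used = {0}
    total_wait = 0
    # The last instruction's time doesn't matter: nothing follows it.
    for t in exec_times[:-1]:
        current = positions[-1]
        # Simplified model: 1 word-time to read the instruction, then t to execute it.
        ready_at = (current + 1 + t) % WORDS_PER_REV
        # Naive: the next consecutive address. Optimized: the slot under the head when ready.
        candidate = ready_at if optimize else (current + 1) % WORDS_PER_REV
        while candidate in used:  # slot taken: try the next one around the drum
            candidate = (candidate + 1) % WORDS_PER_REV
        total_wait += wait_time(ready_at, candidate)
        positions.append(candidate)
        used.add(candidate)
    return positions, total_wait


times = [3, 5, 2, 4]
print(place_program(times, optimize=False))  # ([0, 1, 2, 3], 140)
print(place_program(times, optimize=True))   # ([0, 4, 10, 13], 0)
```

In this toy model the naive layout waits nearly a full revolution between instructions, while the optimized layout doesn't wait at all, which is the kind of speedup that made optimal placement worth automating.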

When I used to fly recreationally in the late 70s and 80s, I was friends with a man who owned a Beechcraft FBO.

He was an interesting character. He started out as an MIT graduate and wrote some of the first compilers for Honeywell Corporation.

He knew the money was in sales, so he became a salesman.

In those days a salesman received a monthly percentage of every lease.

Then he bought an FBO because he loved flying.

But he said that in those early programming days every byte of memory was precious, and if you weren’t using it, you released it.

This was, of course, in assembly language.

I remember that the mainframe I learned on, the 370/135, had 64K of core memory in its processor.

Semiconductor memory was just coming out, and there was an auxiliary cabinet from a third party, about 6 feet tall, that held another 256K.

To me, one of the most amazing developments over time has been cheap memory.

And of course mainframes in those days were far more efficient in their use of what memory they had.

One of the most amazing applications I’ve learned about came from a group of space enthusiasts on Facebook, many of them NASA veterans.

These early computers on Apollo and the moon lander had to do amazing things with a minuscule amount of memory and processing power.

@David they have some pretty amazing exhibits! I love the slide rule/tie clasp from the 1950s. I never did get the hang of using a slide rule, but they did some amazing things with it, including developing the SR-71.

The working example of the IBM 1401 computer is pretty amazing too. That was the predecessor to the IBM 360, and I remember its printer was so legendary that into the early 80s there were companies making controllers so you could use that printer on various other makes and minicomputers.

I asked one of the docents where they get parts for it and he said “eBay!”

Bill,

I have to ask, since I cut my CP/M teeth on one as a kid: do they have an Osborne portable?

Loved that 5-inch screen.

Mike – I don’t remember seeing one, but towards the end my feet were killing me and I went through the last stations pretty quickly. I remember the ad for the Osborne: this svelte model carrying what looked like a small suitcase!

One of the most content-rich posts I’ve seen anywhere in a while. Thanks!

And interesting, on subjects that have shaped my life but on which I’m no expert.

I still wonder why some of these flagship tech giants are so giant. Can’t all be DEI staff.

Random observer

What has amazed me is the rise and fall of so many multibillion-dollar corporations that couldn’t find a market after the technological shifts.

There used to be an area of Massachusetts called Route 128. The name comes from the highway, but it stood for all the technology companies along that road.

It was sort of the Silicon Valley of New England, and it is pretty much all gone now.

Digital Equipment Corporation used to be the second-largest computer maker. It was bought by Compaq Computer, which was itself bought by Hewlett-Packard.

And one of the things brought home to me at this museum was that even the people introducing a new technology don’t always realize its repercussions.

SMS texting on cell phones, for example.

It was put in as an afterthought; the cell phone carriers thought they might use it once in a while to notify customers of things.

I should’ve taken a picture of that quote, but I believe it was from one of the founders of Intel on first hearing about semiconductor memory.

He thought there was no future in it and there was no point in even patenting it.

When the microprocessor first came out people were trying to find a use for it.

I have a friend whom I consider in the top percentile of programmers, all self-taught.

Much better than me, by my own admission.

In the late 70s he was working for Xerox, programming one of their printers with a microprocessor.

Yes, Xerox even made mainframes at one point, as I recall.

Anyway, he tried to convince management to use this new microprocessor for a small personal computer, and they weren’t interested.

Just as Steve Wozniak tried with Hewlett-Packard.

Ah, memory lane. Back when shop class involved chipping stone to make flint axeheads [ok, actually in the late 1960’s], in Algebra II we had access to a teletype link to a mainframe at Denver University. We were doing a section on programming, specifically Dartmouth A-BASIC. We would type our programs on the teletype, saving the text. It was punched onto a paper tape about 1 1/2 inches wide; once it was punched, we would cut it off and feed it into a reader, which sent it to the mainframe at DU. The teletype would print the results if we did not screw up.

The next year, I was at the University of Colorado-Boulder studying FORTRAN IV. I was probably one of the last students to program using Hollerith cards typed on an IBM Model 26 keypunch machine. We would punch our program, add header and footer cards to the deck, take it to the white-coated high priests in the huge computer room, and leave it to be run, finding out a couple of days later whether we got it right.

My next contact with a computer was in 1981 when I bought a Coleco [yeah, the toy company] ADAM computer and printer, using an audio-type tape cassette for removable storage. It was mostly a word processor with a few games, but it was enough to start my sideline of writing.

From there I went to an old un-enhanced Apple IIe, and then to various Windows computers. It is strange how lives get entwined with tech innovations.

Subotai Bahadur

Tom Watson Jr wrote an outstanding memoir titled Father, Son, and Company. I reviewed it here:

https://chicagoboyz.net/archives/42515.html

When I was in high school (1969 I think, or maybe spring of 1970) we had a rep from the Wang Corporation come in and give us a trial of their electronic calculator. It was the size of a desk and had four 8-digit displays attached by cables as thick as my thumb. The asking price was $4,000.00. The school didn’t buy it.

@Bill L

An Wang had a huge influence on computer development. He invented core memory, the predecessor to semiconductor memory.

He then built a successful company selling calculators.

Then it started making minicomputers, and the microcomputer revolution killed the company.

@David

I’ll have to read that tomorrow. I’ve always admired company leaders who bet the company: roll the dice and win with a game-changing product.

I think it’s on YouTube: there is a history of the development of the 747.

It’s worth seeing because they go through the other possible designs they were considering.

They did it right; the design lasted 50 years.

And Boeing decided to build it on a fly-fishing trip with Boeing’s CEO and the head of Pan Am, Juan Trippe.

And at the time it wasn’t even their priority project; the SST was.

The SST was draining them so much financially that they tried to cut the number of engineers on the 747. I forget the name of the chief engineer at the moment, but he fought back, and it was the 747 that saved Boeing.

Tom Watson Jr. has a similar story about bringing out the IBM 360.

I think the microcomputer revolution almost killed IBM, but I think it was Lou Gerstner who moved its focus to services rather than hardware.

And then there’s one of those historical “what ifs”: imagine if IBM had demanded a third or half of the fledgling Microsoft in exchange for giving it the rights to distribute MS-DOS.

Without the backing of IBM, Microsoft would’ve been another one of those footnotes.

This wave was so big that many couldn’t see all of its implications.

Re Wang, the industry changes would have made life difficult and maybe impossible for them in any case, but general opinion is that An Wang greatly reduced their chances by insisting on appointing his son, Fred, to run the company.

“As far as the then-small market share of microcomputers in the early 80s, he said that they were ‘…just toy computers! Nothing will ever come of them!’”

Clay Christensen and Michael Raynor, in their book The Innovator’s Solution, argue that this is *usually* the way disruptive innovations start out…they attack from the bottom, and their potential is minimized by incumbents because they aren’t as functional and/or impressive as the existing products. For example, they say, steel minimills were originally limited in the quality of the steel they could produce, which restricted them to applications like rebar. But over time they improved their quality and became a serious threat to the vast integrated steel mills of companies like US Steel and Bethlehem Steel.

An old computing manual from CERN that I have around here someplace included a little computing history for background. This included the claim that the JCL that IBM offered was originally used as an internal-only, low-level control system for developers. The user interface they wanted to provide took longer than expected to develop (surprise!), but the hardware was ready and customers were waiting, so they polished up their internal JCL a bit and offered that, and then of course had to support it ever after.

Bill,

Good point on Boeing and IBM risking the company to innovate the next generation.

Along those lines, since you were touring the Computer History Museum, what do you make of Facebook/Meta and the Metaverse? Seems to me Zuckerberg is risking the company for Metaverse, to get into the next generation. The stock price is getting killed, but is his approach analogous to what you described with IBM and Boeing?

As a side note, there seems to be a meme among 20- and 30-somethings in popular culture that taking risks and developing the next big thing is… well, not so risky. Sort of Masters of the Universe for the laptop class. I try to explain to them that it’s great to take risks, both spiritually and sometimes financially, but there is a reason why, when people and companies take big risks, it’s called “betting the farm.”

Also, I have noticed that the same people have some preconceptions about making “future-oriented” executive decisions: they seem to feel that the correct choice is obvious and that it is some sort of moral and intellectual failing to choose otherwise. I find this attitude, not only toward business decisions but also toward history, well… troubling. I blame it on the media’s obsession, both mass and social, with placing everything in the realm of stories instead of analysis, with the narrative of “you are the one we have been waiting for.” Choices honestly made tend to be far murkier and less clear-cut.

I look over their proposals and take a positive approach in my initial response to them… “I like your project and I think it can work, but I have seen this tried before, by better people than you, and they failed. Why do you think you are different? What’s your spin?” If they seem somewhat resilient, I tell them to be positive and forward-thinking, but to realize the cemeteries are full of people who thought they were the ones we were waiting for.

With what he is trying to do, I would be more pro-Zuckerberg if it weren’t for how he gamed the 2020 election and the fact that he doesn’t have eyebrows. I mean, he could have just taken some billions and retired.

Meta has been running ads about how virtual reality will have applications in the Real World, such as helping surgeons plan operations in advance. But I believe there already are surgical visualization systems for these applications, and there certainly are (and have long been) virtual reality systems for aviation training, aka flight simulators. It’s not clear that a consumer-oriented company such as Meta/FB has a lot to add to such applications.

There’s betting the company on purpose, where the risks and rewards have been quantified or at least considered. Then there’s betting the company without meaning to. Both far more common and far more dangerous.

The 737 MAX belongs to the latter category. Boeing played the FAA like a cheap fiddle but lost track of the reality that outside of North America and Western Europe, there are many places where the qualifications of everyone from the pilots to the cabin cleaners tend toward barely acceptable. It wasn’t “bad luck” that at least two incidents here barely registered while the craters were in Indonesia and Africa. The only thing that saved Boeing was that Airbus couldn’t plausibly supply all the single-aisle jets in time, so the airlines had to just sit tight and take it.

Betting the company and losing just puts you in bankruptcy court. Books get written about that too.

By promising airline customers a penalty of $1MM (per airplane) if it turned out that additional training was required for the 737 Max, Boeing created a very bad incentive for themselves. The existence of this penalty seems like a probable reason why MCAS was not properly documented in the Pilot’s Operating Handbook.

As a now-retired electrical engineer, I lived and worked in the SF Bay Area from 2014 to 2016. Did everything you mentioned. Multiple times. Then I realized I was living in California and fled. Just like lots of other people.

My father was in the Navy during WW II, and I remember him mentioning the Mare Island Yard. Took a tour: nothing there now. In fact, the US Navy presence has been completely wiped out of the SF Bay. Even the decommissioned ships of the “mothball fleet” at Suisun Bay are gone.

Steve Jobs and Bill Gates were born in 1955. I was born in 1957, so we are contemporaries. When I went to the CHM, I saw plenty of things I used (suffered through?) during my career. I have the T-shirt that spells out CHM in ASCII. I earned respect from the guy at the front desk by knowing that’s what it was.
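For anyone who hasn't seen the shirt: the museum's initials are CHM (Computer History Museum), and "spelling it out in ASCII" means writing each letter's numeric character code. The sketch below assumes the shirt shows each letter as an 8-bit binary code; it could just as well use hex or 7-bit codes. The helper names are hypothetical.

```python
def to_ascii_bits(text):
    """Encode each character as its ASCII code, written as 8 binary digits."""
    return [format(ord(ch), "08b") for ch in text]


def from_ascii_bits(codes):
    """Decode a list of binary strings back into text."""
    return "".join(chr(int(code, 2)) for code in codes)


shirt = to_ascii_bits("CHM")
print(" ".join(shirt))         # 01000011 01001000 01001101
print(from_ascii_bits(shirt))  # CHM
```

C, H and M are codes 67, 72 and 77, which is why all three start with the same `0100` prefix that every uppercase ASCII letter from A through O shares.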

Computers – first used to count the population by a priesthood of experts. Now used to obscure voting to the point that only a priesthood of experts can perform an audit. So I guess we’ve come full circle.

@Locomotive – The Bay Area is funny. Yes, very anti-Navy. When the rounds of base closures came, California was especially hit. The Sacramento area had three bases, the Army Depot, Mather, and McClellan, and all closed. SF: all closed. Of course it could be said that we had far more bases, but I also heard it said that many of the admirals and generals responsible remembered Vietnam and the demonstrations (particularly in the Bay Area), and that was a factor.

Then too, I can’t imagine trying to attract new people to come here and live when housing is so unaffordable. My friend and I were saying that we long-term Californians couldn’t afford to live in the houses we grew up in. Her house in Palo Alto recently sold for $10 million. And the new owner has completely gutted the inside (it is designated a landmark and the exterior can’t be touched); he will wait 2 years before he can move in. There is a lot of money there.

I grew up in Studio City where the rise wasn’t so dramatic but still in the millions.

We went back during “Fleet Week” to see the Blue Angels. Despite what you hear, thousands turned out at a park in Tiburon to see them. That day they had to cancel because of a fog bank over the bay.

There is a great essay by a native San Franciscan on why her beloved city is dying, and what can be done to turn it around. In short, it is a war between the liberals and the leftists.

https://www.theatlantic.com/ideas/archive/2022/06/how-san-francisco-became-failed-city/661199/

@Mike – I’ll have to read more on Zuckerberg. I don’t much care for him for the reasons you mentioned – and he did use his resources to put his thumb on the election. My friend doesn’t like him because she claims that he moved to Malaysia to escape taxes.

Technically, I’m not all that impressed with Facebook. All in all it is just an enhanced version of the BBS (Bulletin Board System) of the 1980s. Plus it is full of fraudsters.

I made the observation to a pretty, young receptionist yesterday (are there any other kind?) that only on Facebook am I beset by “20-something women” who find my sense of humor irresistible and ask whether I will please “friend” them.

Which brought a laugh (I am 72).

I drove around the complex and honestly it seemed to be about the most depressing place to work. Imagine a Soviet-era concrete apartment complex and multiply it by over a mile of the same structures, a half-mile wide. Paint an occasional wall a bright color and there you have the Facebook complex by the Bay.

Driving back, we saw something in the traffic morass on US 101. I have been trying to figure out the best way to get back home during rush hour, and 101 isn’t it. It took us 4 hours to travel 30 miles from Mountain View over the Bay Bridge to Berkeley. (I think I-680 up the East Bay is better.)

Anyway, I saw these luxurious black buses: tinted windows, double-decker, like European tour buses. One, then another; I must have seen half a dozen. I finally figured out those are the “Google buses” that bring these tech millionaires back to SF (and further drove up real estate).

From the Nellie Bowles piece, life in SF’s residential neighborhoods sounds surreal: multimillion-dollar homes and dead addicts around the block.

Heh, very interesting!

I got bit by the computer bug while in HS after attending a short seminar on computer programming (a weekend learning a little bit of FORTRAN) and running programs on a 1401. Went to college in Minnesota and learned programming on a UNIVAC 1100 series. I punched a few cards when it helped to get assignments run ahead of the newbie freshmen who only wanted to use the DEC minicomputer front end. My first job was at an insurance company in Iowa that was running a 370 and 4341 (DOS/VSE coupled via VM) before we switched over to a 3080 running MVS. Oh, what a joy that conversion was, as IBM hadn’t discovered upward compatibility yet. Most of my career has been on IBM mainframes, with a detour into AS/400s (the successor to the IBM S/36 and S/38 machines) for a while and a transition from applications to the systems side of the processor.

The demise of the mainframe has been predicted literally my entire career, and yet here I am coding and running a few programs this morning in z/OS REXX and executing in batch with JCL. I expect to be hanging up my card punch in a year or two, though. IBM shot themselves in the foot by not keeping people with mainframe skills in the employment pipeline, and now no one either wants to work on them or really understands their capabilities. I guess the only thing constant is change.

Great post! Brings back a lifetime of memories, encapsulates much information. Thank you for this.

Bobby, thank you! It was a pleasure writing it and letting you all see it vicariously.

@Christopher, some weeks ago when we were driving around Google Ville, one of the Google people was sort of laughing about COBOL.

To current young programmers it is, if not a joke, ancient history.

And yet, as you said, there are plenty of legacy systems still running it, and I joked to the Google people that when you’re looking for COBOL programmers, it’s all old guys like us.

Some years ago I considered working for the California DMV, and it actually ran an old COBOL mainframe system.

I would hate to have to look at code that is 40 years old and has been maintained by countless programmers.

I used to hate JCL; I never really got the hang of it.

I learned just enough to know what I had to do to execute the program.

In fact, it was important enough that I remember people listing their familiarity with JCL on resumes.

There’s still a demand for us old fossils with our old skills :-)

I read somewhere that the oldest program still running is a DoD contract management program, written in COBOL.

I expect a lot of the problem with Cobol-era experts retiring isn’t so much the language itself…any good programmer could probably learn Cobol in a month or so if he put his mind to it…but undocumented knowledge of how a particular system actually works, which modules do what, etc.

“but undocumented knowledge of how a particular system actually works, which modules do what, etc.”

That’s as likely for a program written six months ago as one written 60 years ago. How likely do you think it is that these companies doing mass layoffs have any real idea what’s walking out the door? The fact that they’ve suddenly noticed they have thousands of useless employees doesn’t inspire confidence in their management process.

Some sad news:

https://nypost.com/2023/03/25/intel-co-founder-gordon-moore-dead-at-94/

On the subject of nerds of yesteryear: when I was in high school, Class of ’72, certain students, always male, wore slide rules on their belts like a weapon. Most opted for the 12″, but the truly elite went for the 18″. I made do with a 6″ aluminum Pickett in my shirt pocket. Not sure what that says about me, other than that my eyes were better then.

I never did get the hang of using a slide rule. Must be a Zen thing. It pretty much got us to the moon and designed the SR-71.

Interesting feature about the slide rule is that it does not produce the answer — instead, it provides the digits for the answer. Say, 472.

Now, does this answer represent 472 Million or 0.472? The slide rule user had to develop a sense of the expected scale of the answer — which was probably a very useful skill for an engineer. It prevented the modern problem of the totally ridiculous answer which comes from fat fingers on the calculator’s keyboard.
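The reason a slide rule gives digits but no decimal point can be shown in a few lines. A slide rule's scales are laid out logarithmically, so sliding one against the other adds logarithms, and only the fractional part of each logarithm (its position along the scale) is represented. The whole-number part, the power of ten, never appears on the rule. The sketch below mimics that with `math.log10`; the function name and the 3-digit reading are assumptions standing in for how finely a person can read the scale.

```python
import math


def slide_rule_digits(a, b, digits=3):
    """Multiply two positive numbers the way a slide rule does; return only the leading digits.

    Sliding the scales adds the *fractional* parts of log10(a) and log10(b);
    the whole-number parts (the powers of ten) never appear on the rule, so
    the decimal point is lost and the user has to supply it.
    """
    position = (math.log10(a) % 1 + math.log10(b) % 1) % 1  # where the cursor lands, 0..1
    reading = 10 ** position  # the value marked at that spot, 1 <= reading < 10
    return round(reading * 10 ** (digits - 1))  # e.g. 472, with no decimal point


for a, b in [(236, 2), (0.236, 2), (23.6, 20000)]:
    print(f"{a} x {b}: the rule reads {slide_rule_digits(a, b)}")
```

All three products read "472" even though the true answers are 472, 0.472 and 472,000; telling them apart is exactly the sense of scale described above.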

A slide rule will give about 3-1/2 digits of precision. That one part in about 3,000 is enough for most things in the real world. Your starting data is rarely much better than one part in a hundred, again in the real world. This quickly breaks down if there isn’t an analytic solution or near approximation and you need extended calculations for a numerical approximation.

The rules of numerical precision don’t seem to be something most people pay much attention to anymore, certainly not journalists. A good rule is to ignore anything beyond the first two digits unless you know they’re significant.
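The "first two digits" rule of thumb amounts to rounding a result to two significant figures. Here is a minimal sketch, assuming hypothetical measurements good to roughly one part in a hundred; `round_sig` is an illustrative helper, not a standard library function.

```python
import math


def round_sig(x, sig=2):
    """Round x to `sig` significant figures (digits counted from the first nonzero one)."""
    if x == 0:
        return 0.0
    # How many decimal places keep exactly `sig` significant digits.
    decimals = sig - 1 - math.floor(math.log10(abs(x)))
    return round(x, decimals)


# Hypothetical measurements, each good to roughly one part in a hundred.
miles = 3.7
hours = 1.3
print(miles / hours)             # a calculator happily shows many more digits
print(round_sig(miles / hours))  # 2.8: about all the data can support
print(round_sig(1234.5678))      # 1200.0
```

Negative `decimals` lets Python's `round` drop digits to the left of the decimal point, which is why 1234.5678 becomes 1200.0.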

The real advantage was the hours of time you could save not having to look up logarithms, trig functions and such. Publishing those tables is another lost industry. A 13 place trig table was the size of a large dictionary.

Wang Laboratories is a case study in business school literature. It has been said that An Wang ran the company like a Chinese family, in that his son (Fred) was put in the top slot for traditional family reasons, despite a lack of managerial ability and interest in the technical side.

FWIW, Wang also came out with the first word processor.

I confess a fondness for the Wang desk calculators in the 300 series. They first introduced me to RPN (reverse Polish notation), which led me (and many others) to gravitate toward HP’s calculators. I still have the HP-35 I bought in 1973 on my desk, and I still use it.
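For readers who never used one, RPN puts the operator after its operands, so no parentheses or "=" key are needed: you enter numbers onto a stack, and each operator combines the top two entries. Below is a minimal sketch of the general stack idea, not how any Wang or HP machine was built. The HP-35 had a fixed four-level stack (X, Y, Z, T), whereas this sketch uses an unbounded Python list; `rpn` is a hypothetical helper name.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def rpn(expression):
    """Evaluate a space-separated RPN expression using a stack."""
    stack = []
    for token in expression.split():
        if token in OPS:
            if len(stack) < 2:
                raise ValueError(f"not enough operands for {token!r}")
            top = stack.pop()    # on an HP, roughly the X register (the display)
            below = stack.pop()  # roughly the Y register
            stack.append(OPS[token](below, top))  # e.g. "10 3 -" means 10 - 3
        else:
            stack.append(float(token))
    if len(stack) != 1:
        raise ValueError("malformed expression")
    return stack[0]


print(rpn("3 4 + 5 *"))  # (3 + 4) * 5 = 35.0
print(rpn("10 3 -"))     # 7.0
```

The order matters for subtraction and division: the second-to-last number entered is the left operand, which matches how "10 ENTER 3 −" works on an RPN calculator.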

There’s a good book about Wang Labs, “Riding the Runaway Horse”, by Charles Kenney.