Charles Murray, quoting himself and Richard Herrnstein from The Bell Curve:

> In sum: If tomorrow you knew beyond a shadow of a doubt that all the cognitive differences between races were 100 percent genetic in origin, nothing of any significance should change. The knowledge would give you no reason to treat individuals differently than if ethnic differences were 100 percent environmental. By the same token, knowing that the differences are 100 percent environmental in origin would not suggest a single program or policy that is not already being tried. It would justify no optimism about the time it will take to narrow the existing gaps. It would not even justify confidence that genetically based differences will not be upon us within a few generations. The impulse to think that environmental sources of difference are less threatening than genetic ones is natural but illusory.
>
> In any case, you are not going to learn tomorrow that all the cognitive differences between races are 100 percent genetic in origin, because the scientific state of knowledge, unfinished as it is, already gives ample evidence that environment is part of the story. But the evidence eventually may become unequivocal that genes are also part of the story. We are worried that the elite wisdom on this issue, for years almost hysterically in denial about that possibility, will snap too far in the other direction. It is possible to face all the facts on ethnic and race differences on intelligence and not run screaming from the room. That is the essential message [pp. 314-315].

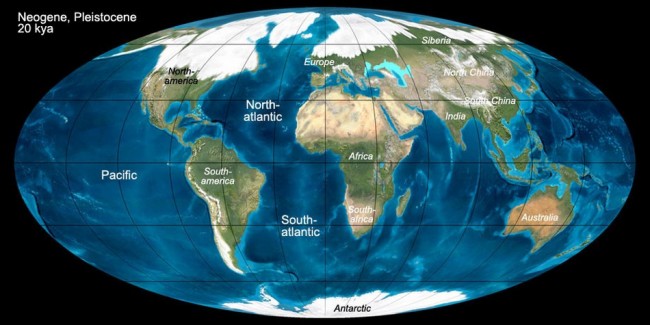

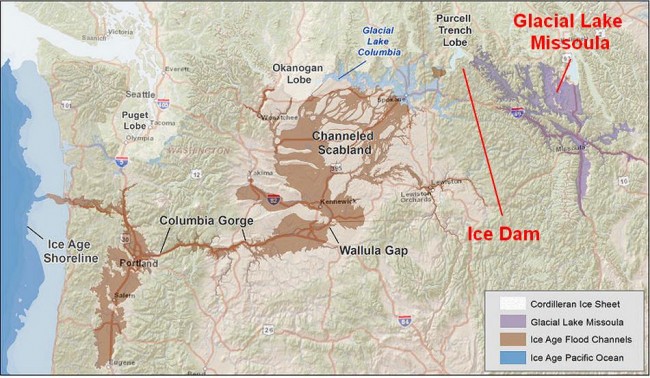

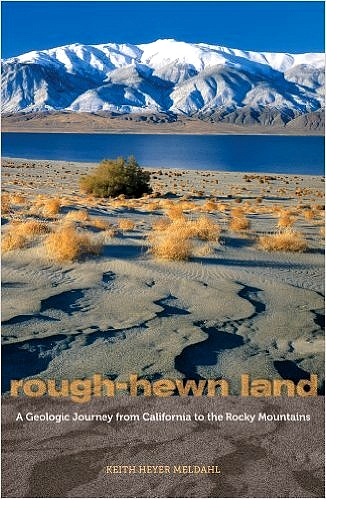

Keith Meldahl, a geologist and professor of geology, has written one of the most interesting